Robots Are Collaborators, Not Competition

New Center for Robotics and Biosystems builds on and strengthens Northwestern’s longstanding leadership in collaborative robotics.

Robots are not coming to take our jobs, control our lives, or make human beings obsolete.

They’re here to work with us and to help us be our best selves, at work and at home, whether we’re assembling an automobile or recovering from a severe injury.

That vision of a future where humans and robots work seamlessly together is the goal of the new Center for Robotics and Biosystems, Northwestern Engineering’s hub for robotics research.

Within a newly expanded 12,000-square-foot space in the Technological Institute that opened this fall, faculty from across disciplines study the science and engineering of embodied intelligence and how it can advance efforts in everything from space exploration to medicine.

“Some people worry about our future with robots,” says Kevin Lynch, professor of mechanical engineering and director of the center. “Certainly there will be a changing employment landscape and the potential for misuse of the technology. But advanced robotics will bring countless benefits to our economy, health, and quality of life. Our future is one of human-robot co-evolution, and the center is working to make that future as beneficial to humanity as possible.”

A Collaborative Future

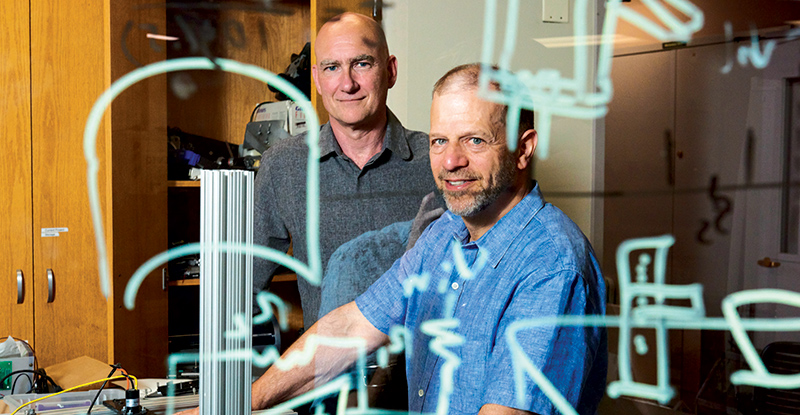

Collaborative robots have deep roots at the McCormick School of Engineering. In fact, Professors Michael Peshkin and Ed Colgate coined the term “cobots” in the mid-1990s.

Peshkin and Colgate joined their research labs in 1989, creating the Laboratory for Intelligent Mechanical Systems (LIMS). In 2012, as new faculty members joined and topics expanded to include neuromechanics and bio-inspired robotics, LIMS became the Neuroscience and Robotics Lab.

With faculty and research scope continuing to grow, the Center for Robotics and Biosystems launched this fall. Faculty have appointments in mechanical engineering, biomedical engineering, computer science, the Feinberg School of Medicine, and the Shirley Ryan AbilityLab.

Research topics include haptic (touch) interfaces, motion planning and control for autonomous robots, swarm robotics, exploration in uncertain environments, robot learning, bio-inspired robots, and the sensorimotor systems of animals.

Still, collaborative human-robot systems remain a key theme across the research of many of the faculty. “We envision a future where humans and robots work together seamlessly,” says Lynch, who is also editor-in-chief of IEEE Transactions on Robotics, a leading academic journal in the field. “We design robots that augment human abilities.”

A Space for Human-Human and Human-Robot Collaboration

The new space—a wide-open collaboration area with smaller, specialized laboratories—includes a prototyping “makerspace” and an area called the “robot zoo,” a place to test the latest research on drones, wheeled and legged mobile robots, and robot arms and hands.

Undergraduates and PhD students from different departments work side by side with students in the popular Master of Science in Robotics program. This encourages the cross-pollination of ideas among students and faculty with different backgrounds, and accommodates visitors from the center’s many collaborators.

The new space, says Lynch, chair of the mechanical engineering department, “will hopefully catalyze even more partnerships at Northwestern.” That in turn should expedite realization of the vision where humans and machines integrate seamlessly in both work and life.

A Progression of Partnerships

Northwestern Engineering’s legacy in robotics started in the 1950s when Dick Hartenberg, a professor, and Jacques Denavit, a PhD student, developed a way to represent mathematically how mechanisms move.

At the time, there was no agreement on how to describe the kinematics, or geometry of motion, of mechanisms consisting of links (rigid bodies, like bones) and joints (parts that allow motion between rigid bodies, like a shoulder joint). The duo showed that the position of one link connected to another by a joint could be represented minimally using only four numbers, or “parameters.” These came to be known as the Denavit-Hartenberg parameters, the standard description of the kinematics of robots for decades to come.
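
To make the “four numbers” concrete, here is a minimal sketch of how one link’s four parameters can be turned into a position and orientation. It assumes the classic textbook form of the convention (joint angle `theta`, link offset `d`, link length `a`, link twist `alpha`), which the article itself does not spell out. The function names and the two-link arm are hypothetical, chosen only for illustration.

```python
import math

def dh_transform(theta, d, a, alpha):
    """4x4 homogeneous transform for one link (classic DH convention, assumed)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ]

def matmul(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def forward_kinematics(dh_rows):
    """Chain the per-link transforms from the base to the tip."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, d, a, alpha in dh_rows:
        T = matmul(T, dh_transform(theta, d, a, alpha))
    return T

# Hypothetical two-link planar arm: each link is 1 unit long.
# The first joint is rotated 90 degrees; the second joint is straight.
arm = [
    (math.pi / 2, 0.0, 1.0, 0.0),  # (theta, d, a, alpha) for link 1
    (0.0,         0.0, 1.0, 0.0),  # (theta, d, a, alpha) for link 2
]
tip = forward_kinematics(arm)
x, y, z = tip[0][3], tip[1][3], tip[2][3]
print(round(x, 6), round(y, 6), round(z, 6))
```

With the first joint turned 90 degrees, the arm points straight along the y axis, so the tip lands two units from the base. Each link contributes exactly one row of four numbers, which is what made the description compact enough to become a standard.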

Thirty years later, when Michael Peshkin arrived at the McCormick School of Engineering as an assistant professor, the field of robotics was focused on trying to develop fully autonomous robots.

“Everyone thought robots would become more and more capable until they could walk among us, and step in for human workers,” says Peshkin, now professor of mechanical engineering. “Nobody had much of an idea how to make that happen, but the goal was always autonomy.”

In the early 1990s, a grant from the General Motors Foundation prompted Peshkin and collaborator Ed Colgate, now Breed Senior Professor of Design, to talk to GM assembly line workers. Though humans and robots shared the floor, they couldn’t work together physically, for safety reasons. Robotic systems had to remain behind a fence, creating a mindset that robot jobs and human jobs were completely distinct.

At the same time, the human workers were using machines to help move heavy parts for assembly, such as hoists. Peshkin and Colgate learned, however, that GM’s workers valued a certain level of independence. Workers got satisfaction from lifting parts with their own muscles, and the fast, smooth motion as they moved parts into place. This joy of free motion was lost when they had the assistance of machines.

“We realized that autonomy for robots wasn’t the right goal,” Peshkin says. “Instead, we could combine the strengths of people in intelligence, perception, and dexterity, with the strengths of robots in persistence, accuracy, and interface to computer systems.”

Inspired, the researchers designed and built cobots—a portmanteau of the term collaborative robots—that physically cooperate with workers to manipulate heavy items. The low-power robots helped guide the workers. When installing an automobile seat, for example, the worker initiated the motion and controlled the action while the cobot guided the seat to its exact position.

“It still gave workers the pleasure of motion that they wanted to retain,” Peshkin says. “The robot became a collaborator, not a replacement.”

Human-Robot Teams Detect Danger

Combat soldiers trying to secure dense, urban areas often face dangerous levels of uncertainty. Will the next alley contain a potential threat, or just kids playing soccer? Northwestern Engineering’s Todd Murphey is developing a way to mitigate the danger: drones that can fly ahead of the troops, sense the environment, and report back what they see to the squad.

Murphey, professor of mechanical engineering, is finding ways to help humans interact and collaborate with autonomous systems. With funding from the Defense Advanced Research Projects Agency, he is developing algorithms and software that allow drones to perceive the environment, then determine which information should be transmitted to humans, and which should be ignored.

“Having some representation of what you’re about to encounter will lead to better decision making,” says Murphey, director of the Master of Science in Robotics program. He and his group are currently determining the best way for drones to relay information and are testing the system in virtual reality environments this fall.

“This sort of feedback loop (modeling perception, making decisions, and then feeding that information back to a person) has implications in everything from manufacturing to driverless cars,” Murphey says. “There’s definitely a role for autonomy to help keep people safer.”

Robots Inspired by Biology

Nearly all mammals use whiskers to explore and sense the environment. Rats, for example, use their whiskers to determine an object’s location, shape, and texture. Seals and sea lions use their whiskers to hunt fish.

Mitra Hartmann, Northwestern Engineering’s Charles Deering McCormick Professor of Teaching Excellence and professor of biomedical engineering and mechanical engineering, is leading an effort to develop robotic whiskers that can imitate these capabilities. The work could help develop robots that can perform touch-based sensing in dark, murky, or dusty environments (like pipes and tunnels), as well as robots that can sense the flow of air and water.

Each robotic whisker—a long, thin, plastic cone attached to an artificial follicle—can sense an object’s location and texture just as a rat’s whisker does. The team is currently constructing an entire array of whiskers for more precise measurements.

“We take a bio-inspired approach, but we don’t just blindly mimic biology,” Hartmann says. “To engineer the best system possible, we need to determine which specific features of the biology are most important.”

Humans Guided by Robots

Humans navigating the world in wheelchairs face obstacles in and out of the chair, but Northwestern Engineering’s Brenna Argall aims to help through autonomy.

Argall, associate professor of computer science, mechanical engineering, and physical medicine and rehabilitation, is working to create partially autonomous wheelchairs that can help their users avoid obstacles, plan and navigate routes, and maneuver in tricky spaces. “We want to build a system that can pay attention and help with certain tasks,” Argall says.

She and her lab are currently working to characterize just how people drive their powered wheelchairs. They built a course in her lab at the Shirley Ryan AbilityLab—complete with doors, ramps, and sidewalks—and are developing datasets of how patients with spinal cord injuries, cerebral palsy, and ALS use different wheelchair interfaces.

“If we know how people operate these machines, we can design autonomy that knows how that operation happens and expects certain signals to create a more fluid symbiosis between human and machine,” Argall says.

She also works with Sandro Mussa-Ivaldi, professor of biomedical engineering, physiology, and physical medicine and rehabilitation, to investigate how a quadriplegic patient could use a system of sensors on their upper body to control a complex system, like a robotic arm. “This system could help with functional tasks and help the body improve and gain strength,” she says. “That’s the gold standard of rehabilitative robotics.”