Bringing Human-Machine Collaboration to Music Videos

New platform Pixie syncs AI-generated images to music

Andrew Paley is a computer scientist. As a PhD student at Northwestern Engineering, he works to democratize access to information through the power of artificial intelligence (AI) and machine learning.

Paley is also a musician. As the front man for Vermont-based The Static Age and as a solo artist, Paley has released multiple albums and performed at venues around the world, from Japan to Brazil to Europe and North America.

Paley has found a way to bring these distinct interests together. His new art generator platform, Pixie, is an AI system that leverages human-computer collaboration to produce unique visualizations for his music.

Developing Pixie

While Paley has devoted the last two years to the computer lab, he has been no stranger to the music studio, writing and recording his latest solo album, Scattered Light, concurrently with his PhD work.

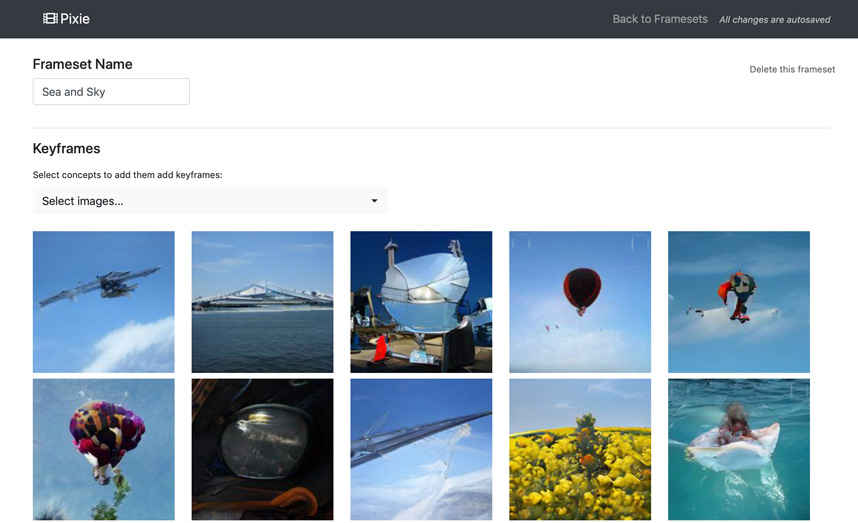

The combination of his dual pursuits fostered an interest in how human-machine collaboration can support creative endeavors. Interested in creating a visual accompaniment to his new music, Paley pitched the idea of Pixie, an AI platform that builds unique, blended images synced to music at the direction of the user, as an exploratory project in Professor Han Liu’s COMP_SCI 396: Statistical Machine Learning course. After receiving positive feedback, he went to work building it.

“It felt like an opportunity to take advantage of everything I was working on,” Paley said. “It was purely exploratory and separate from my core research, but it was a thread connecting my work in these two different parts of my life.”

In designing Pixie, Paley leveraged BigGAN, a conditional generative adversarial network (GAN) developed by Google DeepMind that creates realistic-looking but entirely model-generated images. Paley was inspired by the model’s ability to repurpose images as a way of generating otherworldly visuals. Another platform, a collaborative image-generation tool called Artbreeder, generates synthetic images that users can combine to create something entirely new.

“With Artbreeder, a user is presented with a set of hybrid images — blends of a dozen or more types, from jellyfish to cocker spaniels to velvet — and gets to push the model in different directions by selecting a favorite or blending an additional image class to experiment with. The system will offer variations based on that direction,” Paley said. “The human is the director, but the model conjures up the visuals. That type of human-machine collaboration and discovery drove my curiosity.”

Pixie’s approach echoes Artbreeder’s concept of collaborative exploration, allowing Paley to tag model-generated images he likes for different portions of a song. Unlike other platforms, however, Pixie then generates a script from those tagged keyframes, an analysis of the song, and additional user-set preferences, and uses that script to create animations synced to the music.
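For readers curious about the mechanics, the keyframe-to-animation step described above can be illustrated with a minimal sketch. The article does not describe Pixie’s code, so everything here is an assumption: the hypothetical `frame_latents` function takes a "script" of (time, latent vector) pairs, where the times might come from beat or section boundaries found by song analysis, and uses spherical linear interpolation (slerp), a common technique for moving smoothly between GAN latent vectors, to produce one vector per video frame. Rendering each vector into an image with a generator such as BigGAN is not shown.

```python
import math
from bisect import bisect_right


def slerp(a, b, t):
    """Spherically interpolate between latent vectors a and b at fraction t in [0, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    # Clamp to guard against rounding pushing the cosine outside [-1, 1].
    cos_omega = max(-1.0, min(1.0, dot / (na * nb)))
    omega = math.acos(cos_omega)
    if omega < 1e-6:
        # Nearly parallel vectors: plain linear interpolation is accurate enough.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    s = math.sin(omega)
    wa = math.sin((1 - t) * omega) / s
    wb = math.sin(t * omega) / s
    return [wa * x + wb * y for x, y in zip(a, b)]


def frame_latents(script, duration, fps=24):
    """Hypothetical helper: one latent vector per video frame.

    script: list of (time_in_seconds, latent_vector) pairs sorted by time,
            e.g. tagged keyframes placed on beats found by song analysis.
    """
    times = [t for t, _ in script]
    frames = []
    for i in range(int(duration * fps)):
        now = i / fps
        # Index of the last keyframe at or before the current frame time.
        k = bisect_right(times, now) - 1
        if k < 0:
            frames.append(list(script[0][1]))  # before the first keyframe: hold it
        elif k >= len(script) - 1:
            frames.append(list(script[-1][1]))  # after the last keyframe: hold it
        else:
            t0, z0 = script[k]
            t1, z1 = script[k + 1]
            frames.append(slerp(z0, z1, (now - t0) / (t1 - t0)))
    return frames
```

Slerp is often preferred over a straight linear blend for GAN latents because the linear midpoint of two random high-dimensional vectors has an unusually small norm, which can produce washed-out images; whether Pixie makes this choice is not stated in the article.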

The results are hypnotizing. One of Paley’s videos, for his song “Give Up,” is generated entirely with Pixie’s architecture. Another, “Stay Safe,” experimentally melds Pixie functionality — song analysis, scene collections, and script generation — with aspects of Artbreeder. Both present lush, dream-like visuals that transition seamlessly from one form to another, all while perfectly timed to the music.

“It’s a process of collaboration and discovery — I tell Pixie what imagery I like and give it a song file, and then see what it dreams up,” Paley said. “You can get very granular with the decisions, or you can let it be more random. Each run of the engine produces a wholly new visualization. For the two songs released so far, I picked from at least 40 candidates.”

Finding his Northwestern direction

Paley arrived at Northwestern in 2010 after receiving a Knight Foundation scholarship to pursue a master’s degree in journalism. A coder since high school, Paley was drawn to the field’s opportunity to explore how humans understand the world around them. He also believed it was a domain where he could meaningfully apply his ability to build software.

It was then that Paley met Kris Hammond, Osborn Professor of Computer Science, and Larry Birnbaum, professor of computer science. The two had just launched their startup, Narrative Science, a data-driven storytelling company whose flagship product, Quill, uses AI to analyze large datasets and present the results as accessible stories. Paley joined the company as a software engineer and went on to build and lead the design team. During his tenure, his work produced or contributed to more than a dozen patents. “It was the perfect merge of journalism and technology,” he said.

After seven years with the company, Paley sought to explore AI’s promise further. He joined Northwestern Engineering’s PhD program in computer science, with Hammond as his adviser.

“After focusing on one facet of AI for several years, I wanted to take a step back and get a bird’s-eye view of the AI landscape and how it has changed so I could decide where to dig in next,” Paley said.

As a member of Hammond’s Cognition, Creativity, and Communication Research Lab, Paley explores the democratization of access to information through conversational systems and other forms of AI-empowered user experiences aimed at redefining the interface between humans and data.

His work includes the design and co-development of the core platform behind the SCALES project, a collaboration among Northwestern faculty that gives users access to the information and insights hidden inside federal court records, regardless of their data and analytics skills. This work has led to the development of Satyrn, a dataset-agnostic “natural language notebook” interface for non-expert data exploration and analysis.

Paley also helped develop the COVID-19 Emergency Resource Exchange, a central hub that connected Illinois healthcare providers with valuable supplies during the early stages of the pandemic.

“We spent a lot of time during the era of Big Data making the world machine readable at scale, but most people can’t make sense of all that data without a certain set of complex skills,” Paley said. “We can’t reduce the complexity, but we can put more of the burden of managing it on the machine, so humans can just ask questions. That human-data interface is core to what we’re doing in the lab.”

What’s next

Paley is currently working on a Pixie-produced visual accompaniment to the entirety of Scattered Light, released on October 16. With touring sidelined until 2021 due to the COVID-19 pandemic, Paley continues to expand Pixie’s functionality, exploring new models and forms of generative animation for fun and for course credit within COMP_SCI 499: Independent Projects. He hopes to incorporate the platform’s visuals into his live shows once he can play in front of fans.

He said working at the intersection of his two interests feels natural.

“It’s been fun to work on something at the border between the two halves of my life,” Paley said. “It’s a reminder that the underpinnings of these two halves are not all that different — it’s all a creative process.”