AI Algorithm Unblurs the Cosmos

Tool produces faster, more realistic images than current methods

The cosmos would look a lot better if Earth’s atmosphere weren’t photobombing it all the time.

Even images obtained by the world’s best ground-based telescopes are blurry due to the atmosphere’s shifting pockets of air. While seemingly harmless, this blur obscures the shapes of objects in astronomical images, sometimes introducing errors into the physical measurements that are essential for understanding the nature of our universe.

Now researchers at Northwestern University and Tsinghua University in Beijing have unveiled a new strategy to fix this issue. The team adapted a well-known computer-vision algorithm used for sharpening photos and, for the first time, applied it to astronomical images from ground-based telescopes. The researchers also trained the artificial intelligence (AI) algorithm on data simulated to match the Vera C. Rubin Observatory’s imaging parameters, so, when the observatory opens next year, the tool will be instantly compatible.

While astrophysicists already use technologies to remove blur, the adapted AI-driven algorithm works faster and produces more realistic images than current technologies. The resulting images are blur-free and truer to life. They also are beautiful — although that’s not the technology’s purpose.

“Photography’s goal is often to get a pretty, nice-looking image,” said Northwestern Engineering’s Emma Alexander, the study’s senior author. “But astronomical images are used for science. By cleaning up images in the right way, we can get more accurate data. The algorithm removes the atmosphere computationally, enabling physicists to obtain better scientific measurements. At the end of the day, the images do look better as well.”

The research was published March 30 in the Monthly Notices of the Royal Astronomical Society.

Alexander is an assistant professor of computer science at the McCormick School of Engineering, where she runs the Bio Inspired Vision Lab. She co-led the new study with Tianao Li, an undergraduate in electrical engineering at Tsinghua University and a research intern in Alexander’s lab.

When light emanates from distant stars, planets, and galaxies, it travels through Earth’s atmosphere before it hits our eyes. Not only does our atmosphere block out certain wavelengths of light, it also distorts the light that reaches Earth. Even clear night skies still contain moving air that affects light passing through it. That’s why stars twinkle and why the best ground-based telescopes are located at high altitudes where the atmosphere is thinnest.

“It’s a bit like looking up from the bottom of a swimming pool,” Alexander said. “The water pushes light around and distorts it. The atmosphere is, of course, much less dense, but it’s a similar concept.”

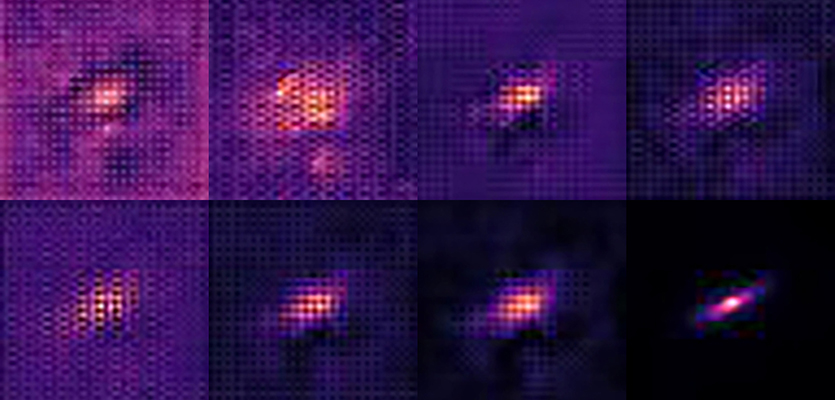

The blur becomes an issue when astrophysicists analyze images to extract cosmological data. By studying the apparent shapes of galaxies, scientists can detect the gravitational effects of large-scale cosmological structures, which bend light on its way to our planet. This can cause an elliptical galaxy to appear rounder or more stretched than it really is. But atmospheric blur smears the image in a way that warps the galaxy shape. Removing the blur enables scientists to collect accurate shape data.
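To make the effect concrete, here is a minimal sketch of how blur changes a measured galaxy shape. It is not taken from the study. The Gaussian "galaxy," the round Gaussian blur kernel, the moment-based ellipticity measure, and all function names are assumptions chosen for simplicity.

```python
import numpy as np

def gaussian_image(size, sigma_x, sigma_y):
    """Elliptical Gaussian centered on an odd-sized grid, normalized to unit flux."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    img = np.exp(-0.5 * ((x / sigma_x) ** 2 + (y / sigma_y) ** 2))
    return img / img.sum()

def ellipticity(img):
    """One ellipticity component from flux-weighted second moments."""
    ny, nx = img.shape
    y, x = np.mgrid[0:ny, 0:nx]
    flux = img.sum()
    xc = (img * x).sum() / flux
    yc = (img * y).sum() / flux
    qxx = (img * (x - xc) ** 2).sum() / flux
    qyy = (img * (y - yc) ** 2).sum() / flux
    return (qxx - qyy) / (qxx + qyy)

def blur(img, kernel):
    """Circular convolution via FFT; the kernel is centered on the grid."""
    k_hat = np.fft.fft2(np.fft.ifftshift(kernel))
    return np.real(np.fft.ifft2(np.fft.fft2(img) * k_hat))

def demo_shapes():
    galaxy = gaussian_image(65, 3.0, 1.5)   # elongated along x
    seeing = gaussian_image(65, 2.0, 2.0)   # round stand-in for atmospheric blur
    return ellipticity(galaxy), ellipticity(blur(galaxy, seeing))

if __name__ == "__main__":
    e_true, e_seen = demo_shapes()
    print(f"true ellipticity:    {e_true:.2f}")   # about 0.60
    print(f"blurred ellipticity: {e_seen:.2f}")   # about 0.35
```

In this toy case the blurred galaxy looks markedly rounder than the real one, a change much larger than the slight shape differences scientists are trying to measure.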

“Slight differences in shape can tell us about gravity in the universe,” Alexander said. “These differences are already difficult to detect. If you look at an image from a ground-based telescope, a shape might be warped. It’s hard to know if that’s because of a gravitational effect or the atmosphere.”

To tackle this challenge, Alexander and Li combined an optimization algorithm with a deep-learning network trained on astronomical images. Among the training images, the team included simulated data that matches the Rubin Observatory’s expected imaging parameters. The resulting tool produced images with 38.6 percent less error than classic methods for removing blur and 7.4 percent less error than modern methods.
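The general idea of pairing an optimization loop with a denoiser can be sketched in a few lines. The example below is a simplified plug-and-play ADMM deconvolution, not the researchers' code. A hand-written shrinkage step (`standin_denoiser`) stands in for the trained network, the blur kernel is assumed to be known, and the parameters `rho`, `tau`, and `n_iter` are set by hand rather than learned. All names here are hypothetical.

```python
import numpy as np

def gaussian(size, sigma_x, sigma_y):
    """Elliptical Gaussian with peak 1, centered on an odd-sized grid."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    return np.exp(-0.5 * ((x / sigma_x) ** 2 + (y / sigma_y) ** 2))

def standin_denoiser(v, tau):
    """Placeholder for a trained network: shrink values and keep flux nonnegative."""
    return np.maximum(v - tau, 0.0)

def pnp_admm_deconvolve(observed, psf, rho=0.02, tau=2e-3, n_iter=10):
    """Alternate a data-fit step (solved in Fourier space) with a denoising step."""
    H = np.fft.fft2(np.fft.ifftshift(psf))   # transfer function of the blur
    Y = np.fft.fft2(observed)
    z = np.zeros_like(observed)              # denoised estimate
    u = np.zeros_like(observed)              # scaled dual variable
    for _ in range(n_iter):
        # x-update: least-squares fit to the data, pulled toward z - u
        rhs = np.conj(H) * Y + rho * np.fft.fft2(z - u)
        x = np.real(np.fft.ifft2(rhs / (np.abs(H) ** 2 + rho)))
        # z-update: the "plug-in" denoiser replaces a hand-derived prior
        z = standin_denoiser(x + u, tau)
        # dual update: accumulate the disagreement between x and z
        u = u + x - z
    return z

def demo(seed=0):
    rng = np.random.default_rng(seed)
    truth = gaussian(65, 3.0, 1.5)
    psf = gaussian(65, 2.0, 2.0)
    psf /= psf.sum()
    H = np.fft.fft2(np.fft.ifftshift(psf))
    blurred = np.real(np.fft.ifft2(np.fft.fft2(truth) * H))
    observed = blurred + 1e-3 * rng.standard_normal(truth.shape)
    restored = pnp_admm_deconvolve(observed, psf)
    err_observed = float(np.sum((observed - truth) ** 2))
    err_restored = float(np.sum((restored - truth) ** 2))
    return err_observed, err_restored

if __name__ == "__main__":
    before, after = demo()
    print(f"squared error before: {before:.3f}, after: {after:.3f}")
```

In an "unrolled" version of this scheme, the loop runs for a fixed number of steps and the denoiser and step parameters are trained together on example images. Here they are fixed by hand purely for illustration.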

When the Rubin Observatory officially opens next year, its telescopes will begin a decade-long deep survey across an enormous portion of the night sky. Because the researchers trained the new tool on data specifically designed to simulate Rubin’s upcoming images, it will be able to help analyze the survey’s highly anticipated data.

For astronomers interested in using the tool, the open-source, user-friendly code and accompanying tutorials are available online.

“Now we pass off this tool, putting it into the hands of astronomy experts,” Alexander said. “We think this could be a valuable resource for sky surveys to obtain the most realistic data possible.”

The study, “Galaxy Image Deconvolution for Weak Gravitational Lensing with Unrolled Plug-And-Play ADMM,” used computational resources from the Computational Photography Lab at Northwestern University.