Detecting Letters Written in the Air

Sonia Lai (MSR '21) discusses the lessons she learned doing her independent project for Northwestern Engineering's Master of Science in Robotics (MSR) program and how that knowledge is influencing her final project in the program.

Sonia Lai has been intrigued by computer vision for as long as she can remember, so when it came time to choose her independent project during winter quarter in Northwestern Engineering's Master of Science in Robotics (MSR) program, she knew where she wanted to focus.

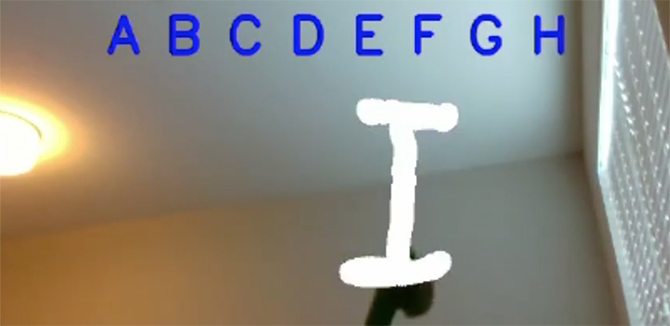

Lai (MSR '21) set out to use computer vision and machine learning to classify letters written in the air in front of a camera. The goal was to build a model that identifies each letter and a pipeline that tracks the pen's trajectory, so the letter appears on the screen as it is being written. She also wanted to stream the processed frames to a virtual camera so the letters could be shown through teleconferencing systems. Instead of relying on an external tablet or projector, a user could simply write in the air with the pen to display letters on other participants' screens.
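To make the tracking idea concrete, here is a minimal sketch, not Lai's actual code, of how per-frame pen-tip positions might be turned into "ink." It assumes a detector (not shown) has already produced one `(x, y)` pixel coordinate per frame, or `None` when the pen tip is not visible. The function names and the tiny text canvas are hypothetical; a real pipeline would more likely draw onto the video frame with OpenCV before sending it to the virtual camera.

```python
from typing import List, Optional, Tuple

Point = Tuple[int, int]


def split_into_strokes(track: List[Optional[Point]]) -> List[List[Point]]:
    """Group consecutive detected pen-tip positions into strokes.

    A None entry (pen tip not detected in that frame) ends the current stroke.
    """
    strokes: List[List[Point]] = []
    current: List[Point] = []
    for p in track:
        if p is None:
            if current:
                strokes.append(current)
                current = []
        else:
            current.append(p)
    if current:
        strokes.append(current)
    return strokes


def line_points(a: Point, b: Point) -> List[Point]:
    """Integer pixels on the segment a-b, using Bresenham's line algorithm."""
    x0, y0 = a
    x1, y1 = b
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    points: List[Point] = []
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy
    return points


def render(strokes: List[List[Point]], width: int, height: int) -> List[str]:
    """Draw each stroke as connected line segments on a text canvas."""
    canvas = [["." for _ in range(width)] for _ in range(height)]
    for stroke in strokes:
        pixels = [stroke[0]]  # keeps single-point strokes visible
        for a, b in zip(stroke, stroke[1:]):
            pixels.extend(line_points(a, b))
        for x, y in pixels:
            if 0 <= x < width and 0 <= y < height:
                canvas[y][x] = "#"
    return ["".join(row) for row in canvas]


if __name__ == "__main__":
    track = [(0, 0), (2, 2), None, (4, 0), (4, 2)]
    for row in render(split_into_strokes(track), 5, 3):
        print(row)
```

Connecting consecutive detections with line segments, rather than plotting only the detected points, keeps the letter continuous even when the pen moves several pixels between frames.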

The independent project is one of three requirements for MSR students, and it prepares them for their larger final project. Both projects give students the chance to choose the area of robotics they want to focus on. The third requirement is an online portfolio that houses each student's work.

Lai took Machine Learning at the same time as her independent project, and at times her project called for material, such as working with neural networks, that the class would not cover until later in the quarter. She learned these skills on her own as the need arose, which is partly the point of the independent project requirement.

"I needed to familiarize myself with building a robust model and data loader for the written letters using PyTorch [an open-source machine learning tool]," she said. "Thanks to online materials, I was able to start working on computer vision and integration with a prototype-like pre-trained model and improve this model at the end after I had more knowledge of convolutional neural networks."

The knowledge Lai acquired earlier in MSR helped her refine her learning path. She used the Intel® RealSense™ Python documentation to get started building a pipeline that processes depth-camera frames with OpenCV. She also received ongoing advice from Professor Matthew Elwin, who oversees all independent projects, on improving frame quality and processing speed to boost the project's performance.

"This project helped me learn OpenCV and PyTorch," Lai said, "but more importantly, it helped me understand how to work independently."

If she were to do the project again, Lai said she would consider changing her approach to recognizing letters. Instead of classifying each completed letter as a single image, as she did with her dataset, she would try to use the pen's strokes to identify the letter as it is being written.
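One common way to represent strokes, offered here only as an illustration and not as the approach Lai planned, is an 8-direction chain code: each movement between successive pen-tip positions is quantized to one of eight compass directions, producing a sequence a classifier could consume instead of a finished image. The sketch assumes image coordinates with y increasing downward; the function name, the `min_step` jitter threshold, and the choice to collapse repeated directions are all hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def chain_code(points: List[Point], min_step: float = 1.0) -> List[int]:
    """Quantize pen movement into 8 directions.

    Codes run counterclockwise: 0 = right, 2 = up, 4 = left, 6 = down.
    Steps shorter than min_step are treated as jitter and skipped, and
    repeated directions are collapsed so the code describes the shape of
    the stroke rather than how fast it was drawn.
    """
    codes: List[int] = []
    if not points:
        return codes
    last = points[0]
    for p in points[1:]:
        dx = p[0] - last[0]
        dy = last[1] - p[1]  # flip so positive dy means "up" on screen
        if math.hypot(dx, dy) < min_step:
            continue  # too small to count; keep measuring from `last`
        angle = math.atan2(dy, dx)  # radians in (-pi, pi]
        code = round(angle / (math.pi / 4)) % 8
        if not codes or codes[-1] != code:
            codes.append(code)
        last = p
    return codes


if __name__ == "__main__":
    # An "L": down the left side, then across the bottom.
    print(chain_code([(0, 0), (0, 5), (0, 10), (5, 10), (10, 10)]))
```

Because the sequence records direction rather than position, the same letter written larger or faster yields a similar code, which is one reason stroke-based recognition can be attractive compared with classifying a finished image.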

Lai is currently working on her final project, which also involves machine learning, so her PyTorch experience has already proved useful. She is confident that her education, and the opportunity to focus on a project of her choosing, will help her throughout her career.

"This project allowed me to see what might be expected in a professional research and design environment," she said. "When I face similar challenges in the future, this experience will be a strong foundation to help me analyze and resolve those challenges."