Applying AI Techniques in Cybersecurity, Counterterrorism, and International Security

Day-long conference highlighted research projects in new Northwestern Security and AI Lab

From predicting terrorist attacks to destabilizing terrorist networks to predicting, detecting, and mitigating cyber-attacks in real time, artificial intelligence (AI) has shown potential as a valuable tool to protect against nefarious actors around the world.

A newly launched Northwestern lab will help lead in developing and deploying AI technologies that serve as solutions to these global threats.

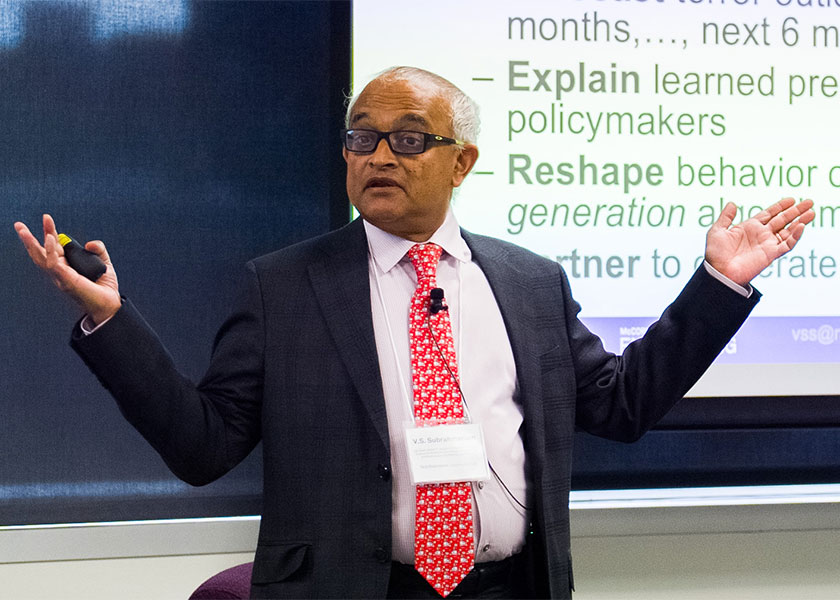

Led by V.S. Subrahmanian, Walter P. Murphy Professor of Computer Science in Northwestern Engineering and a faculty fellow at the Northwestern Roberta Buffett Institute for Global Affairs, the new Northwestern Security and AI Lab (NSAIL) is conducting fundamental research in AI relevant to issues of cybersecurity, counterterrorism, and international security.

On October 20, the Buffett Institute and the McCormick School of Engineering hosted the “Conference on AI and National Security” to mark the lab’s launch. During the day-long event, Subrahmanian and his collaborators presented several research projects that aim to address challenges at the ever-evolving intersection of AI and security.

“Posing the right questions when something is murky is probably more important than finding answers,” said Northwestern Engineering Dean Julio M. Ottino during the event’s opening remarks. “There are no big prizes for solving correctly what turns out to be the wrong question. If the right questions get asked, everything will follow.”

Below are NSAIL projects showcased at the event.

NTEWS: Forecasting malicious activity

A guiding goal for NSAIL is to develop predictive models that can analyze specific terrorist group activity and forecast future attacks. With the Northwestern Terror Early Warning System (NTEWS) project, NSAIL researchers are creating a platform that can predict terrorist behaviors well in advance of any attacks that they might carry out, allowing officials to plan for those attacks and mitigate the impact.

Drawing on knowledge of the environments in which terrorist groups operate, Subrahmanian and his team distill information about each targeted group into a relational database. The database records the different types of attacks a group might carry out, along with a set of independent variables, monitored by NSAIL, that may help predict those attacks within a given timeframe.
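A relational layout like the one described above can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration under stated assumptions, not the NTEWS schema. The table names, the two monitored variables (`arrests_of_leaders`, `funding_disruptions`), the group name, and every row are hypothetical. The query pairs each month's variables with whether a given attack type occurred in the following month, which is one way to frame prediction "within a given timeframe."

```python
import sqlite3

# Hypothetical NTEWS-style schema (illustrative only): monitored variables per
# group per month, plus a separate table of attacks by type.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE observations (
    group_name TEXT NOT NULL,
    month INTEGER NOT NULL,          -- month index: 1, 2, 3, ...
    arrests_of_leaders INTEGER,      -- hypothetical independent variable
    funding_disruptions INTEGER,     -- hypothetical independent variable
    PRIMARY KEY (group_name, month)
);
CREATE TABLE attacks (
    group_name TEXT NOT NULL,
    month INTEGER NOT NULL,
    attack_type TEXT NOT NULL        -- e.g. 'bombing', 'kidnapping'
);
""")
conn.executemany(
    "INSERT INTO observations VALUES (?, ?, ?, ?)",
    [("GroupA", 1, 2, 0), ("GroupA", 2, 0, 1), ("GroupA", 3, 1, 0)],
)
conn.executemany(
    "INSERT INTO attacks VALUES (?, ?, ?)",
    [("GroupA", 2, "bombing"), ("GroupA", 3, "kidnapping")],
)

# Training rows: this month's variables paired with whether a bombing
# occurred in the NEXT month (a one-month prediction window).
rows = conn.execute("""
SELECT o.group_name, o.month, o.arrests_of_leaders, o.funding_disruptions,
       EXISTS (SELECT 1 FROM attacks a
               WHERE a.group_name = o.group_name
                 AND a.month = o.month + 1
                 AND a.attack_type = 'bombing') AS bombing_next_month
FROM observations o
ORDER BY o.month
""").fetchall()

for row in rows:
    print(row)
# ('GroupA', 1, 2, 0, 1)
# ('GroupA', 2, 0, 1, 0)
# ('GroupA', 3, 1, 0, 0)
```

Keeping variables and attacks in separate tables lets the same observations be reused for any attack type or window length by changing only the query.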

A critical component of NTEWS prediction modeling is making its findings accessible to officials.

“The very heart of our work, right from the very beginning, has been this idea that we must be able to come up with predictions that we can explain to policymakers, preferably in an elevator-style pitch,” Subrahmanian said.

The NTEWS project team — which also includes Priyanka Amin, a third-year undergraduate student in computer science in Northwestern’s Weinberg College of Arts and Sciences, Chongyang Gao, PhD candidate in computer science, NSAIL senior research associate Chiara Pulice, and consultant Aaron Mannes — is attempting to predict both when attacks likely will and will not happen so resources can be allocated appropriately.
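A forecast that fits in an elevator pitch and says both when attacks are and are not expected can be as simple as a one-variable threshold rule (a decision stump). The sketch below is a hypothetical illustration, not the NTEWS model. The variable name `recruitment_posts` and the monthly data are invented.

```python
from typing import List, Tuple

def fit_stump(xs: List[float], ys: List[int]) -> Tuple[float, float]:
    """Find the threshold t that maximizes accuracy of the rule
    'predict an attack if x >= t, otherwise predict no attack'."""
    best_t, best_acc = xs[0], -1.0
    for t in sorted(set(xs)):
        preds = [1 if x >= t else 0 for x in xs]
        acc = sum(p == y for p, y in zip(preds, ys)) / len(ys)
        if acc > best_acc:  # strict '>' keeps the lowest threshold on ties
            best_t, best_acc = t, acc
    return best_t, best_acc

# Hypothetical monthly history: a monitored variable (invented name
# "recruitment_posts") and whether an attack followed (1) or not (0).
recruitment_posts = [3, 10, 2, 12, 8, 1]
attack_followed = [0, 1, 0, 1, 1, 0]

threshold, accuracy = fit_stump(recruitment_posts, attack_followed)
print(f"Expect an attack if recruitment_posts >= {threshold}; "
      f"otherwise expect none (right in {accuracy:.0%} of past months).")
```

The accuracy here is measured on the same months used to pick the threshold, so it overstates real performance. A real system would validate on held-out time periods.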

Using unclassified and open-source data about terrorist groups, gathered and shared in collaboration with external partners, the NTEWS system will generate predictions along with guidance on how to interpret them. In accordance with its core operating principles, NSAIL will then share the forecasts and outcomes in publicly available venues such as websites and scientific papers.

PLATO: Predicting terrorist network lethality

Earlier work on a system called Shaping Terrorist Organization Network Efficacy (STONE) studied how to minimize the number of attacks that a terrorist network will carry out. Doing so, however, requires the ability to predict how many attacks a specific network structure will produce. The Predicting Lethality Analysis of Terrorist Organization (PLATO) model fills that gap by predicting the number of attacks a terror group will carry out based solely on its network structure.

“We want to understand the link between the network structure and the lethality, and with PLATO, we are capable of doing so,” Pulice said. “By using machine learning and regressor models, we understand clearly which network features are the most important, and thus features we can use as good predictors for lethality.”

Merging machine learning with techniques from graph theory and social network analysis, PLATO algorithms were tested on datasets detailing the relationships between members of Al-Qaeda and the Islamic State (ISIS).
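To make the idea of "which network features are the most important" concrete, the sketch below computes a few structural features for toy networks and ranks them by the strength of their correlation with attack counts. This is a deliberately simpler stand-in for PLATO's machine learning regressors. The networks, the lethality numbers, and the feature set are all invented and are not Al-Qaeda or ISIS data.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Edge = Tuple[str, str]

def network_features(edges: List[Edge]) -> Dict[str, float]:
    """Simple structural features of an undirected network given as an edge list.
    (Isolated members with no edges are not counted.)"""
    degree: Counter = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    n, m = len(degree), len(edges)
    return {
        "nodes": n,
        "edges": m,
        "density": 2 * m / (n * (n - 1)) if n > 1 else 0.0,
        "max_degree": max(degree.values(), default=0),
    }

def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient; 0.0 if either series is constant."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy) if vx and vy else 0.0

# Toy networks and invented attack counts (hypothetical data).
networks = [
    [("a", "b"), ("b", "c")],                                  # short chain
    [("h", "1"), ("h", "2"), ("h", "3"), ("h", "4")],          # star around a hub
    [("w", "x"), ("w", "y"), ("w", "z"),
     ("x", "y"), ("x", "z"), ("y", "z")],                      # fully connected cell
    [("p", "q"), ("q", "r"), ("r", "s"), ("s", "t")],          # longer chain
]
lethality = [2, 9, 5, 3]

features = [network_features(edges) for edges in networks]
ranking = sorted(
    ((name, pearson([f[name] for f in features], lethality)) for name in features[0]),
    key=lambda item: abs(item[1]),
    reverse=True,
)
for name, r in ranking:
    print(f"{name:>10}: r = {r:+.2f}")  # max_degree ranks first for this toy data
```

With only four networks, these correlations are illustrative at best. A regressor trained on many networks, as PLATO is described as using, can capture interactions between features that a one-at-a-time correlation misses.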

The PLATO team currently includes Gao, Francesco Parisi (University of Calabria), Pulice, and Subrahmanian.

“What we found for both Al Qaeda and ISIS is that the operational sub-networks and the leadership of networks are strictly connected to the lethality,” Pulice said. “If we operate on one of those two sub-networks, intuitively we affect the lethality.”
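"Operating on" a sub-network can be illustrated by removing its members and recomputing a structural feature. In the sketch below, the network, the leader label `L1`, and the use of maximum degree as the feature are all hypothetical. The sketch shows only the structural change. Estimating the resulting effect on lethality would require a fitted model such as PLATO's, which is not reproduced here.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

Edge = Tuple[str, str]

def remove_members(edges: Iterable[Edge], removed: Set[str]) -> List[Edge]:
    """Drop every tie that touches a removed member."""
    return [(u, v) for u, v in edges if u not in removed and v not in removed]

def max_degree(edges: Iterable[Edge]) -> int:
    """Largest number of ties held by any single member."""
    degree: Counter = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return max(degree.values(), default=0)

# Hypothetical network: "L1" is a leader tied to three operatives.
network = [("L1", "A"), ("L1", "B"), ("L1", "C"), ("A", "B"), ("C", "D")]
leadership = {"L1"}

after = remove_members(network, leadership)
print(max_degree(network), "->", max_degree(after))  # 3 -> 1
print(after)  # [('A', 'B'), ('C', 'D')]
```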

NCEWS: Managing AI and cybersecurity vulnerabilities

NSAIL also focuses on issues concerning information, cyber, and technology security, including managing vulnerabilities in an enterprise, detecting malware and estimating its spread, managing cyber alerts, and preventing intellectual property (IP) theft.

The lab is developing a decision model system called the Northwestern Cyber Early Warning System (NCEWS) to manage two types of vulnerabilities: known vulnerabilities, which are typically assigned a Common Vulnerabilities and Exposures (CVE) identifier, and zero-day vulnerabilities, which are flaws in a system or device that hackers have discovered but that are not yet known to the vendor. Approximately 20,000 CVEs are disclosed annually.
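Known vulnerabilities are tracked by identifiers of the form `CVE-<year>-<sequence>`, where the sequence has at least four digits. A zero-day, by definition, has no such identifier yet. As a small illustration that is not part of NCEWS, the hypothetical helper below validates and parses CVE IDs. This is a natural first step before joining a CVE to other data about it.

```python
import re
from typing import Optional, Tuple

# CVE IDs look like CVE-<4-digit year>-<sequence of 4 or more digits>.
CVE_ID = re.compile(r"^CVE-(\d{4})-(\d{4,})$")

def parse_cve_id(text: str) -> Optional[Tuple[int, int]]:
    """Return (year, sequence number) for a well-formed CVE ID, else None."""
    match = CVE_ID.match(text.strip().upper())
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))

print(parse_cve_id("CVE-2021-44228"))  # (2021, 44228)
print(parse_cve_id("cve-2014-0160"))   # (2014, 160)
print(parse_cve_id("CVE-21-1"))        # None
```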

“What we want to be able to do is to look at a CVE and ask ‘Is this ever going to be used in an attack?’” Subrahmanian said. “If not, you probably don’t need to worry about it as much. We want to be able to take information about a new CVE and predict if this vulnerability is going to be used in an attack and, if so, is it going to be used in an attack tomorrow or a month from tomorrow. That impacts the decision you make about what you do with that vulnerability. And, of course, you want to be able to predict how severe the attack will be.”
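The three predictions in the quote (whether a vulnerability will be exploited, when, and how severely) can feed a single triage decision. The sketch below shows one hypothetical way to combine them. The scoring formula, the 30-day horizon, the 0 to 10 severity scale (CVSS-like), and the labels `vuln-A` through `vuln-C` are assumptions for illustration, not NCEWS outputs.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class VulnForecast:
    name: str                # hypothetical label, not a real CVE ID
    p_exploit: float         # predicted probability it will ever be exploited
    days_to_exploit: float   # predicted days until first exploitation
    severity: float          # predicted severity on an assumed 0-10 scale

def priority(f: VulnForecast, horizon_days: float = 30.0) -> float:
    """Hypothetical score: likelier, sooner, and more severe attacks rank higher."""
    urgency = 1.0 / (1.0 + f.days_to_exploit / horizon_days)
    return f.p_exploit * f.severity * urgency

def triage(forecasts: List[VulnForecast]) -> List[str]:
    """Names ordered from highest to lowest priority."""
    return [f.name for f in sorted(forecasts, key=priority, reverse=True)]

forecasts = [
    VulnForecast("vuln-A", p_exploit=0.9, days_to_exploit=1, severity=7.5),
    VulnForecast("vuln-B", p_exploit=0.2, days_to_exploit=5, severity=9.8),
    VulnForecast("vuln-C", p_exploit=0.8, days_to_exploit=30, severity=9.0),
]
print(triage(forecasts))  # ['vuln-A', 'vuln-C', 'vuln-B']
```

Here `vuln-B` has the highest severity but ranks last because it is unlikely to be exploited. This mirrors the quote's point that a vulnerability that will never be used in an attack needs less attention.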

The sooner NCEWS can make these predictions, the more lead time an organization has to take appropriate action.

Subrahmanian found that, on average, approximately 133 days pass between when a vulnerability first becomes known and when the National Institute of Standards and Technology (NIST) distributes information about its risks. Further, he found that 49 percent of vulnerabilities are used in attacks before NIST updates the National Vulnerability Database (NVD).
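Both statistics are straightforward to compute once each vulnerability has a few dates attached. The sketch below runs on four invented records, not Subrahmanian's data. It measures the share of *all* records exploited before NVD publication. The article does not say which denominator the 49 percent figure uses.

```python
from datetime import date
from typing import List, Optional, Tuple

# (first known, NVD publication, first observed exploitation or None)
Record = Tuple[date, date, Optional[date]]

def disclosure_stats(records: List[Record]) -> Tuple[float, float]:
    """Return (mean days from first known to NVD publication,
    share of all records exploited before NVD publication)."""
    lags = [(nvd - known).days for known, nvd, _ in records]
    early = sum(1 for _, nvd, exploited in records
                if exploited is not None and exploited < nvd)
    return sum(lags) / len(lags), early / len(records)

# Toy records, invented for illustration.
records: List[Record] = [
    (date(2022, 1, 1), date(2022, 3, 1), date(2022, 2, 1)),    # exploited before NVD
    (date(2022, 1, 10), date(2022, 1, 20), None),              # never exploited
    (date(2022, 2, 1), date(2022, 2, 11), date(2022, 3, 1)),   # exploited after NVD
    (date(2022, 3, 1), date(2022, 4, 1), date(2022, 3, 15)),   # exploited before NVD
]
mean_lag, share_early = disclosure_stats(records)
print(mean_lag, share_early)  # 27.5 0.5
```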

“If I’m a bad guy, I just watch what’s happening. I don’t have to discover my own vulnerabilities,” Subrahmanian said. “I wait for someone else to discover it, and then I can build an exploit.”

Building on and improving a previous system Subrahmanian developed, NCEWS uses an ensemble of predictors, combining natural language processing methods and social network analysis to mine ongoing discussions about a given vulnerability.
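The article does not describe NCEWS's individual predictors, so the sketch below uses two crude, hypothetical stand-ins to show the ensemble idea. A keyword score stands in for natural language processing, and a count of distinct discussants stands in for social network analysis. The two scores are averaged (soft voting). The keyword list, the `saturation` parameter, and the posts are all invented.

```python
from typing import Callable, Dict, List

Post = Dict[str, str]  # {"author": ..., "text": ...}

# Hypothetical keyword list standing in for a real NLP model.
EXPLOIT_TERMS = {"poc", "exploit", "rce", "metasploit"}

def text_score(posts: List[Post]) -> float:
    """Fraction of posts that mention an exploit-related term."""
    if not posts:
        return 0.0
    hits = sum(1 for p in posts if EXPLOIT_TERMS & set(p["text"].lower().split()))
    return hits / len(posts)

def social_score(posts: List[Post], saturation: int = 10) -> float:
    """Number of distinct authors discussing the CVE, scaled to [0, 1]."""
    return min(len({p["author"] for p in posts}) / saturation, 1.0)

def ensemble_probability(posts: List[Post],
                         predictors: List[Callable[[List[Post]], float]]) -> float:
    """Soft-voting ensemble: average the base predictors' scores."""
    return sum(f(posts) for f in predictors) / len(predictors)

posts = [
    {"author": "a", "text": "PoC released for this bug"},
    {"author": "b", "text": "is there an exploit yet?"},
    {"author": "a", "text": "patch available"},
    {"author": "c", "text": "working exploit on github"},
]
p = ensemble_probability(posts, [text_score, social_score])
print(round(p, 3))  # 0.525
```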

“We’re able to generate very high F1 scores for whether a vulnerability will be exploited or not and reasonably well in terms of when and how severe,” Subrahmanian said.
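The F1 score is the harmonic mean of precision P and recall R, F1 = 2PR / (P + R). It suits this task because exploited vulnerabilities are a minority, so plain accuracy can look high even for a model that predicts "never exploited" every time. The labels below are invented for eight hypothetical CVEs. The article does not report the actual scores.

```python
from typing import Sequence, Tuple

def precision_recall_f1(y_true: Sequence[int],
                        y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """Binary precision, recall, and F1 (1 = 'will be exploited')."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Invented labels: was each CVE actually exploited, and what was predicted?
actual    = [1, 1, 1, 0, 0, 0, 1, 0]
predicted = [1, 1, 0, 0, 1, 0, 1, 0]
print(precision_recall_f1(actual, predicted))  # (0.75, 0.75, 0.75)
```

The same quantities answer false-alarm questions about security alerts. Precision is the share of raised alarms that are real, and 1 minus recall is the share of true alarms that are missed.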

By adapting an epidemiological model of disease spread to cybersecurity, NSAIL also developed a model called DIPS (detected, infected, susceptible, and patched) to predict how badly a network is likely to be affected by a new piece of malware. Separately, the team examined false alarm rates to determine what percentage of alarms raised by security products are of real concern, whether the lab can predict which alerts are true, and what percentage of true alarms will be missed.
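DIPS resembles the compartmental models that epidemiologists use for disease, such as SIR. The article does not give the DIPS equations, so the sketch below is a hypothetical discrete-time version. Its transitions are assumptions: susceptible hosts become infected or are patched, infected hosts are detected, and detected hosts are cleaned and patched. All rates are likewise assumed for illustration.

```python
from dataclasses import dataclass

@dataclass
class Dips:
    d: float  # detected: infection found, host isolated (assumed not infectious)
    i: float  # infected but undetected
    s: float  # susceptible
    p: float  # patched: no longer vulnerable

def step(x: Dips, beta: float = 0.3, detect: float = 0.2,
         cleanup: float = 0.5, patch: float = 0.05) -> Dips:
    """One discrete time step with hypothetical transition rates."""
    n = x.d + x.i + x.s + x.p
    infections = beta * x.s * x.i / n   # S -> I, SIR-style contact term
    patched_s = patch * x.s             # S -> P, patched before infection
    detections = detect * x.i           # I -> D
    cleanups = cleanup * x.d            # D -> P, cleaned and patched
    return Dips(
        d=x.d + detections - cleanups,
        i=x.i + infections - detections,
        s=x.s - infections - patched_s,
        p=x.p + patched_s + cleanups,
    )

state = Dips(d=0.0, i=10.0, s=990.0, p=0.0)   # 1,000 hypothetical hosts
history = [state]
for _ in range(100):
    state = step(state)
    history.append(state)

peak_infected = max(h.i for h in history)
print(f"peak undetected infections: {peak_infected:.1f}, "
      f"patched after 100 steps: {state.p:.0f}")
```

Every outflow from one compartment is an inflow to another, so the total number of hosts stays constant. The peak of undetected infections is one simple proxy for "how badly" a network is hit.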

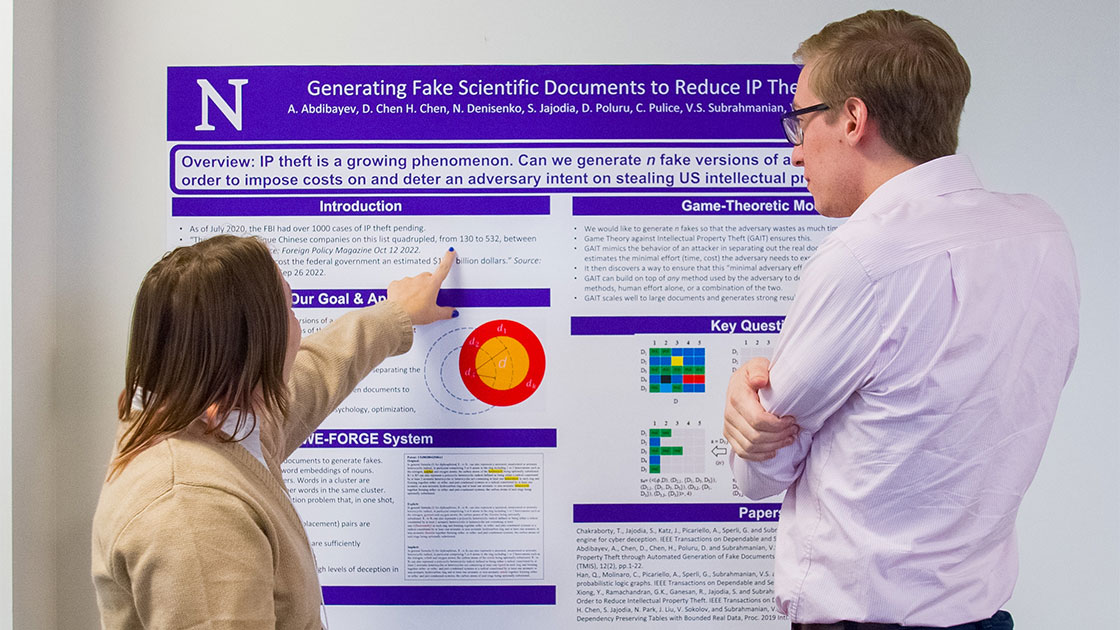

FORGE: Imposing costs on intellectual property thieves

To address the growing challenge of intellectual property theft, NSAIL is developing projects such as the Fake Online Repository Generation Engine (FORGE) and WE-FORGE to generate fake versions of sensitive documents with the aim of imposing costs on an attacker.

“We want to buy time for the defender and frustrate and delay the adversary,” Subrahmanian said. “If an adversary does not know that the network has fakes, then he’s going to spend a lot of time stealing documents and executing his design on the wrong one. If the adversary does know that the organization uses fakes, then he has to spend a lot more time in the system, which would hopefully raise a red flag.”
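The article does not describe how FORGE or WE-FORGE choose their replacements. The sketch below only illustrates the general idea of producing several consistent fakes of one document by swapping key technical terms for plausible alternatives. The document, the substitution table, and the function name `generate_fakes` are hypothetical.

```python
import random
import re
from typing import Dict, List

def generate_fakes(document: str, substitutions: Dict[str, List[str]],
                   n_fakes: int, seed: int = 0) -> List[str]:
    """Return up to n_fakes distinct fake versions of document.

    Each fake consistently replaces every occurrence of each key term with
    one plausible-looking alternative drawn from a hand-written table."""
    if not substitutions:
        return []
    rng = random.Random(seed)
    # Longest terms first so overlapping terms match the longer one.
    pattern = re.compile("|".join(
        re.escape(t) for t in sorted(substitutions, key=len, reverse=True)))
    fakes = set()
    for _ in range(100 * n_fakes):       # bounded number of attempts
        if len(fakes) >= n_fakes:
            break
        choice = {term: rng.choice(alts) for term, alts in substitutions.items()}
        fake = pattern.sub(lambda m: choice[m.group(0)], document)
        if fake != document:
            fakes.add(fake)
    return sorted(fakes)

# Hypothetical "sensitive" document and substitution table.
doc = "Anneal the alloy at 450 C for 2 hours over a nickel catalyst."
table = {
    "450 C": ["420 C", "480 C"],
    "2 hours": ["90 minutes", "3 hours"],
    "nickel": ["cobalt", "platinum"],
}
fakes = generate_fakes(doc, table, n_fakes=3)
for fake in fakes:
    print(fake)
```

Replacing all terms in a single regex pass prevents an inserted alternative from being rewritten again by a later substitution. This keeps each fake internally consistent, which matters if an adversary is to spend time "executing his design on the wrong one."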

SockDef: Mitigating review fraud

Subrahmanian’s team at Northwestern and collaborators at the University of Calabria in Italy recently won the Best Paper Award at the 20th IEEE International Conference on Dependable, Autonomic & Secure Computing for their paper on e-commerce review fraud titled “A New Dynamically Changing Attack on Review Fraud Systems and a Dynamically Changing Ensemble Defense.”

Malicious actors operate so-called "sock farms": small armies of sockpuppet accounts that push a fake opinion about a product. The paper describes how such actors can use a reinforcement-learning-based strategy called SockAttack to target review platforms and evade static, classification-based machine learning (ML) detectors. In response, the research team proposed a novel defense called SockDef that can be built on top of one or more existing review fraud detectors to mitigate the attack's harmful impact.
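The paper's title describes SockDef as a dynamically changing ensemble built on existing detectors. Its actual mechanism is not described in this article, so the sketch below substitutes a classic, simpler technique, the weighted majority algorithm. Each existing detector votes, and whenever a true label becomes known, the weight of every detector that got it wrong is multiplied by a penalty. As a result, detectors an adapting attacker has learned to evade gradually lose influence. The three detectors and the review stream are hypothetical.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

Review = Dict[str, Any]
Detector = Callable[[Review], int]  # 1 = flagged as fake, 0 = looks genuine

class WeightedMajorityEnsemble:
    """Weighted-majority vote over existing detectors; weights of detectors
    that err are multiplied by `penalty` once true labels become known."""

    def __init__(self, detectors: Sequence[Detector], penalty: float = 0.5):
        self.detectors = list(detectors)
        self.weights = [1.0] * len(self.detectors)
        self.penalty = penalty

    def predict(self, review: Review) -> int:
        fake_votes = sum(w for w, d in zip(self.weights, self.detectors) if d(review) == 1)
        return 1 if fake_votes >= sum(self.weights) / 2 else 0

    def update(self, review: Review, label: int) -> None:
        self.weights = [w * self.penalty if d(review) != label else w
                        for w, d in zip(self.weights, self.detectors)]

# Hypothetical static detectors.
def extreme_rating(r: Review) -> int:
    return int(r["rating"] in (1, 5))

def new_account(r: Review) -> int:
    return int(r["account_age_days"] < 30)

def short_text(r: Review) -> int:
    return int(len(r["text"]) < 20)

# Adapted fakes come from old accounts with long text, evading two detectors.
stream: List[Tuple[Review, int]] = [
    ({"rating": 5, "account_age_days": 400, "text": "Best blender I have ever owned, five stars"}, 1),
    ({"rating": 5, "account_age_days": 500, "text": "Changed my mornings completely, buy it now"}, 1),
    ({"rating": 3, "account_age_days": 10, "text": "ok"}, 0),
]
ensemble = WeightedMajorityEnsemble([extreme_rating, new_account, short_text])
predictions = []
for review, label in stream:
    predictions.append(ensemble.predict(review))   # predict first...
    ensemble.update(review, label)                  # ...then learn from the label
print(predictions, ensemble.weights)  # [0, 1, 0] [1.0, 0.125, 0.125]
```

The first adapted fake slips through. After one update, however, the ensemble already trusts the detector that still works, which is the kind of adaptivity a static classifier lacks.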

Considering future concerns

While AI clearly plays a major role in cyber defense, Subrahmanian outlined his concerns regarding how hackers will use AI to learn how defenses are being used and predict whether a planned attack will be detected by a defender.

He also discussed the potential for hackers to use AI to generate previously unknown forms of malware, attack graphs, and deepfake-enabled phishing vectors, and to combine these techniques with emerging cyber-physical systems such as drones, or even with biological agents.

Subrahmanian also identified potential future roles for AI techniques in detecting phishing messages and posts, identifying infected URLs, and identifying adversary behavior.

“These are going to get increasingly challenging,” Subrahmanian said. “Imagine receiving a deepfake video of your kid trained by an adversary who can gather open-source information. We don't yet know how to generate fake videos in real time, but I think it's only a matter of time before some of that happens.”