Advancing AI Systems in Cybersecurity, Counterterrorism, and International Security

Day-long conference highlighted research projects in the Northwestern Security and AI Lab

Artificial intelligence (AI) models trained on unclassified, open-source data can predict terrorist attacks, aid in destabilizing terrorist networks, protect against intellectual property theft, and predict, detect, and mitigate cyber-attacks in real time.

The Northwestern Security and AI Lab (NSAIL) team is one of the leaders of a growing multidisciplinary community developing and deploying AI technologies to address these global threats and protect against malicious actors around the world.

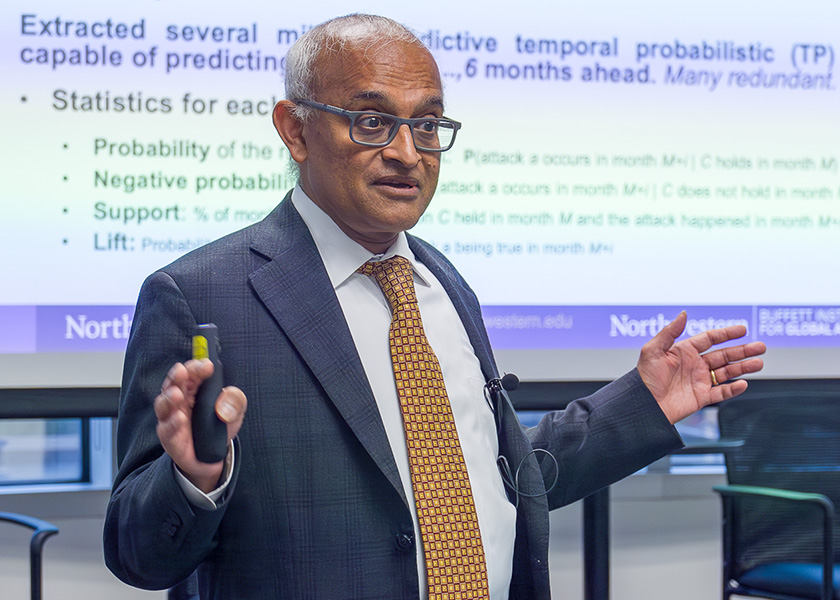

Led by V.S. Subrahmanian, Walter P. Murphy Professor of Computer Science at Northwestern Engineering and a faculty fellow at the Northwestern Roberta Buffett Institute for Global Affairs, NSAIL is conducting fundamental research in AI relevant to issues of cybersecurity, counterterrorism, and international security.

On October 12, the Buffett Institute and the McCormick School of Engineering hosted the “Conference on AI and National Security” to showcase NSAIL’s work. Building on the inaugural conference held last year, the event included research demonstrations, presentations, and panel discussions with leading experts in AI, cybersecurity, and national security. Approximately 260 people participated, either in person or online.

B.HACK: Predicting targeted attacks

A foundational goal for NSAIL is to develop predictive models that can analyze specific terrorist group activity and forecast future attacks.

“It's really, really important to study your opponent,” Subrahmanian said. “You cannot mount a good defense against any kind of attack, regardless of whether it's a terrorist attack, a war, or a cyber attack, unless you understand your adversary.”

Building on NSAIL’s Northwestern Terror Early Warning System (NTEWS) project — a machine learning framework that generates forecasts about future attacks and predicts terrorist behaviors — Subrahmanian and computer science PhD student Lirika Sola launched the Boko Haram Analytics Against Child Kidnapping (B.HACK) project to provide more granular risk assessments of Nigerian schools targeted by Boko Haram.

One of the most dangerous terrorist groups in the world, Boko Haram has abducted thousands of children from Nigerian schools and displaced millions of people from their homes since its emergence in 2002, according to BBC News.

Built on a specialized dataset that draws on resources including the Armed Conflict Location & Event Data Project, B.HACK is an AI-enabled system that assigns a Boko Haram kidnapping risk score to every school in Nigeria. The platform lets a user examine schools within a selected region of interest and review the probability of each school being attacked, based on factors including the five nearest security installations and the number of prior Boko Haram attacks within a specific radius (zero to 50 miles) of each school.
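To make the two factors named above concrete, here is a minimal Python sketch of how such inputs might be computed for a single school. It is an illustration under stated assumptions, not the B.HACK implementation: the function names, the use of great-circle (haversine) distance, and the dictionary keys are all hypothetical, and the article does not say how B.HACK turns these factors into a risk score.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius, used for great-circle distance


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def school_features(school, installations, attacks, radius_miles=50.0, k=5):
    """Hypothetical feature builder for one school.

    school: (lat, lon); installations and attacks: lists of (lat, lon).
    Returns the distances to the k nearest security installations and the
    number of prior attacks within radius_miles of the school.
    """
    inst_dists = sorted(haversine_miles(*school, *p) for p in installations)
    prior = sum(1 for p in attacks if haversine_miles(*school, *p) <= radius_miles)
    return {
        "nearest_installation_miles": inst_dists[:k],
        "prior_attacks_in_radius": prior,
    }
```

In a real system, features like these would be passed to a trained model that outputs the per-school probability; the choice of model is not described in the article.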

Subrahmanian noted that the B.HACK platform’s risk analysis techniques can apply to predictions of different types of targeted attacks.

“We have a methodology where we can predict other related phenomena, like which security installations will be targeted or which tourist or transportation sites might be targeted,” Subrahmanian said. “This is the first of a long series of spatial predictions we hope to be able to make in the coming years.”

PCORE: Forecasting malicious activity caused by climate change

As climate change dramatically alters the locations of water and vegetation sites in Africa, pastoralists are forced to adapt their movement patterns to sustain their herds. The competition over resources and disputes over land rights are increasing the number of violent conflicts as herders encroach on subsistence farmland or the traditional territory of other herders.

In joint work with the United Nations Department of Political and Peacebuilding Affairs, NSAIL’s Pastoral Conflict Reasoning Engine (PCORE) project generates a map which identifies the locations within five countries — Burundi, Cameroon, Central African Republic, Chad, and the Democratic Republic of the Congo — at risk of pastoral conflicts.

After dividing each of the five countries into cells that correspond to specific regions, the team gathered data on each cell's history of conflict, along with weather data and ground data on variables including terrain, land use, and roads.
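As a rough illustration of the gridding step, the Python sketch below assigns each recorded conflict to a latitude/longitude cell and counts conflicts per cell. The 0.5-degree cell size, the function names, and the sample coordinates are assumptions made for this example; the article does not state how PCORE defines its cells.

```python
import math
from collections import Counter


def cell_of(lat, lon, cell_deg=0.5):
    """Map a coordinate to a (row, col) grid cell; cell_deg is a hypothetical size."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def conflicts_per_cell(events, cell_deg=0.5):
    """Count past conflict events, given as (lat, lon) pairs, in each grid cell."""
    return Counter(cell_of(lat, lon, cell_deg) for lat, lon in events)


# Made-up coordinates for illustration only; these are not PCORE data.
history = conflicts_per_cell([(4.4, 18.6), (4.3, 18.7), (7.1, 21.2)])
```

Per-cell counts like these could then be joined with weather and ground variables for the same cell to form one row of model input.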

The machine learning models developed by the PCORE team apply several different algorithms to predict whether conflict will occur. Subrahmanian presented an example of a risk assessment in the Central African Republic using a decision-tree algorithm.

“If a cell had over 5.5 conflicts in the past, its relative humidity at two meters is less than or equal to 5.9 percent, and the surface soil wetness is less than or equal to 27.4 percent, then there is a 100 percent probability that such a cell will experience conflict,” Subrahmanian said. “Ten cells validate these rules, and in every one of those 10 cases, there was a conflict.”
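The rule quoted above can be written as a simple predicate, and its reported 100 percent figure corresponds to the share of matching cells that experienced conflict. The Python sketch below shows that calculation; the field names and the sample cells are hypothetical, and only the three thresholds come from the quote.

```python
def rule_fires(cell):
    """True when a cell meets all three conditions in the quoted rule."""
    return (
        cell["past_conflicts"] > 5.5
        and cell["rel_humidity_2m_pct"] <= 5.9
        and cell["surface_soil_wetness_pct"] <= 27.4
    )


def rule_support(cells):
    """Return (number of matching cells, share of those cells with conflict)."""
    matched = [c for c in cells if rule_fires(c)]
    if not matched:
        return 0, None  # the rule matched nothing, so no share can be computed
    hits = sum(1 for c in matched if c["conflict"])
    return len(matched), hits / len(matched)


# Made-up cells for illustration only; these are not PCORE data.
example_cells = [
    {"past_conflicts": 7, "rel_humidity_2m_pct": 5.0,
     "surface_soil_wetness_pct": 20.0, "conflict": True},
    {"past_conflicts": 6, "rel_humidity_2m_pct": 5.9,
     "surface_soil_wetness_pct": 27.4, "conflict": True},
    {"past_conflicts": 3, "rel_humidity_2m_pct": 4.0,
     "surface_soil_wetness_pct": 10.0, "conflict": False},
]
matched_count, conflict_share = rule_support(example_cells)
```

In the case Subrahmanian described, 10 cells matched the rule and all 10 saw conflict, which is where the 100 percent probability comes from.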

Imposing costs on hackers

NSAIL also focuses on issues concerning information, cyber, and technology security, including managing vulnerabilities in an enterprise, managing cyber alerts, and preventing intellectual property (IP) theft.

Cybersecurity project teams are addressing malware, which causes significant harm to individuals and enterprises by stealing sensitive data, disrupting business operations, damaging systems, and exposing confidential information.

“We always want to put ourselves in the shoes of the bad guy and say, ‘if I build a system, how would the bad guy attack it?’” Subrahmanian said. “We put ourselves in the shoes of our adversaries to try to get in front of it and craft defenses.”

Subrahmanian is a coauthor of a new book — with Qian Han and Sai Deep Tetali (Meta), Salvador Mandujano and Sebastian Porst (Google), and Yanhai Xiong (William & Mary) — called The Android Malware Handbook: Detection and Analysis by Human and Machine (No Starch Press Inc., 2023) that introduces the Android threat landscape and presents practical guidance to detect and analyze malware.

The team found that features related to app permissions are linked to whether an app is malware or not. They also proposed novel features based on an analysis of the behaviors of the app.

“Watch those permissions,” Subrahmanian said. “Always check and see whether the app's permissions are consistent with how you intend to use the app and what the app does.”
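One common way to turn app permissions into model inputs is a binary vector with one slot per permission. The Python sketch below illustrates that idea only; the four-permission vocabulary and the sample app are hypothetical, and this is not presented as the feature set used in the book.

```python
def permission_vector(requested, vocabulary):
    """Encode an app's requested permissions as 0/1 flags over a fixed vocabulary."""
    requested = set(requested)
    return [1 if p in requested else 0 for p in vocabulary]


# Small illustrative vocabulary of standard Android permission names.
VOCAB = [
    "android.permission.INTERNET",
    "android.permission.SEND_SMS",
    "android.permission.READ_CONTACTS",
    "android.permission.RECEIVE_BOOT_COMPLETED",
]

# Hypothetical flashlight app that requests permissions unrelated to its purpose.
flashlight_app = ["android.permission.SEND_SMS", "android.permission.READ_CONTACTS"]
features = permission_vector(flashlight_app, VOCAB)
```

A vector like this could be fed to a classifier alongside behavior-based features; a mismatch between an app's purpose and its requested permissions is the kind of signal the quote above warns about.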

Predictive analysis of NATO Locked Shields exercises

In another effort to protect computer systems from real-time attacks, an NSAIL team conducted joint work with the Netherlands Defence Academy and the Technical University of Delft to detect suspicious network sessions in the NATO Locked Shields exercise.

An annual cyber defense exercise organized by the NATO Cooperative Cyber Defence Centre of Excellence, Locked Shields is a forum for thousands of cybersecurity experts across more than 30 countries to enhance their skills in defending national IT systems and critical infrastructure against malicious attacks.

“It is not only important to correctly predict which sessions are malicious, but also to make these predictions as early as possible,” said Chiara Pulice, an NSAIL senior research associate who has played an important role in this effort.

“Novel predictive models produced by the research team have the potential to have a transformative impact on how organizations can monitor their suspicious activity on their enterprise networks,” said research collaborator Roy Lindelauf, head of the Data Science Center of Excellence at the Netherlands Ministry of Defence.

Deepfakes and international conflict

While malicious actors increasingly use deepfake technology to do harm, NSAIL researchers are at the leading edge of developing AI systems that generate realistic deepfake videos to sow dissension within terror groups.

Daniel W. Linna Jr. moderated the concluding panel of the Conference on AI and National Security, which focused on the intersection of international conflict and deepfake technology.

Linna is a senior lecturer and director of law and technology initiatives at Northwestern, with a joint appointment at Northwestern Engineering and the Northwestern University Pritzker School of Law. Panelists included Larry Birnbaum, professor of computer science at Northwestern Engineering; Dan Byman (Georgetown University); and retired Lieutenant General John N.T. Shanahan (former director, US Department of Defense Joint Artificial Intelligence Center).

The group discussed a range of issues, including how deepfakes are being used to support undemocratic geopolitical events and to compromise elections; the ethical implications of government agencies employing deepfakes; how deepfakes fit within the suite of military information operations; and the state, federal, and international regulation of these and other AI tools.

Birnbaum noted that deepfake technology is readily available and will continue to improve in quality.

“Some of this technology is under the control of organizations that are reasonably reliable,” Birnbaum said. “But that's not going to last very long. We have to assume that this will be relatively quickly a ubiquitous and easy-to-use technology.”

Birnbaum also explained that the automation of deepfake technology will allow for increased personalization.

“Misinformation and disinformation are already flooding the zone. And deepfakes will add to that pain,” Birnbaum said. “Marketers do this all the time — they segment markets, and they tailor messages to particular markets. When you automate, you can make that market smaller and smaller — eventually, maybe a market of one. When the disinformation or misinformation can be targeted to very small units, it can potentially be made much more appealing and more believable, because it matches so precisely what a particular person might want to think.”