Good Old-Fashioned Artificial Intelligence

In the era of deep learning, we often come across the terms Symbolic AI and Non-Symbolic AI. What exactly is Symbolic AI? To start, Symbolic AI (or Classical AI) is the branch of Artificial Intelligence concerned with representing knowledge in an explicitly declarative form.

An Efficient Medium - Symbolic AI

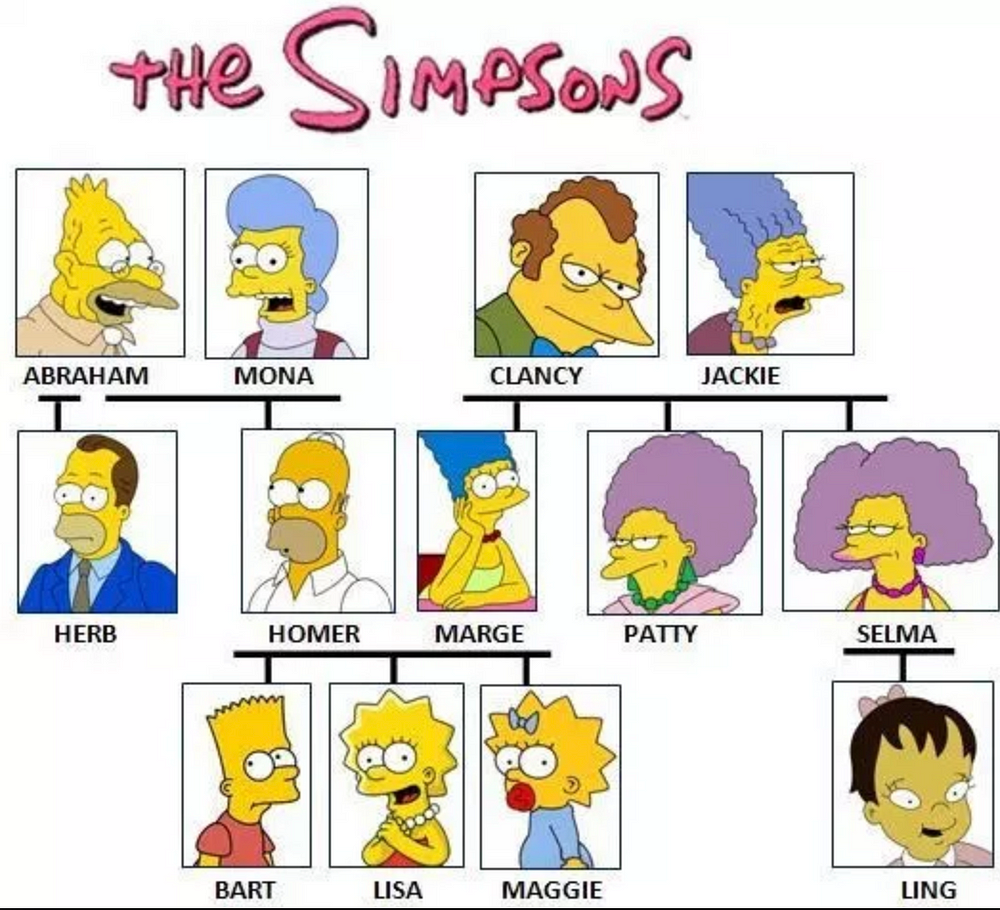

Symbolic AI is an approach to Artificial Intelligence in which knowledge is described in terms of ‘constants’ and ‘predicates.’ The constants are simply objects in a world, and the predicates are relationships between those objects. An excellent example of Symbolic AI is a family tree. Look at the figure above. By applying deductive reasoning to an initial list of relations, we can infer new relationships between objects (e.g., Herb is the half-brother of Homer).
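To make the idea of constants and predicates concrete, here is a minimal Python sketch of that kind of deduction. The specific facts, the placeholder name `HerbsMother`, and the helper functions are assumptions made up for illustration; they are not read from the figure.

```python
# Constants are plain strings; each predicate is a set of (parent, child) pairs.
# All facts below are illustrative assumptions, not taken from the figure.
father = {("Abe", "Homer"), ("Abe", "Herb")}
mother = {("Mona", "Homer"), ("HerbsMother", "Herb")}  # placeholder name
male = {"Abe", "Homer", "Herb"}


def parents(person):
    """Return the set of known parents of `person`."""
    return {p for p, child in father | mother if child == person}


def is_half_sibling(a, b):
    """Deduce half-siblings: both parents known for each, exactly one shared."""
    if a == b:
        return False
    pa, pb = parents(a), parents(b)
    return len(pa) == 2 and len(pb) == 2 and len(pa & pb) == 1


def is_half_brother(a, b):
    """a is the half-brother of b if a is male and a half-sibling of b."""
    return a in male and is_half_sibling(a, b)


print(is_half_brother("Herb", "Homer"))
```

Nobody typed in the half-brother relation. It falls out of the parent facts plus one hand-written rule, which is the whole point of the symbolic approach.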

Interpretable representation is a huge benefit of Symbolic AI. The algorithms generalize at a higher conceptual level through reasoning, and their results can be traced back step by step. This is a big deal! When dealing with real-world data, you might want an explanation of why your algorithm reached a certain decision. With Symbolic AI, the framework gives both you and the program the same building blocks (constants, symbols, relations), which let you understand the decisions it makes.
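Traceability is easier to appreciate with a small example. The sketch below is a toy, assuming a single hand-written rule and two made-up facts: every derived fact is stored together with the rule and premises that produced it, so the program can answer "why?" in plain terms. The names `derive_grandparents`, `explain`, and `why` are hypothetical.

```python
# Facts are (predicate, subject, object) triples. Both are illustrative assumptions.
facts = {
    ("parent", "Abe", "Homer"),
    ("parent", "Homer", "Bart"),
}

# For every derived fact: the rule name and the premises that produced it.
why = {}


def derive_grandparents(facts, why):
    """Apply one rule: parent(X, Y) and parent(Y, Z) implies grandparent(X, Z)."""
    derived = set()
    for p1, x, y1 in facts:
        for p2, y2, z in facts:
            if p1 == "parent" and p2 == "parent" and y1 == y2:
                conclusion = ("grandparent", x, z)
                derived.add(conclusion)
                why[conclusion] = ("grandparent_rule", [(p1, x, y1), (p2, y2, z)])
    return derived


def explain(fact, why):
    """Return a human-readable justification for a fact."""
    if fact not in why:
        return f"{fact} was given as an initial fact."
    rule, premises = why[fact]
    return f"{fact} follows from {premises} by {rule}."


new_facts = derive_grandparents(facts, why)
for fact in new_facts:
    print(explain(fact, why))
```

The explanation is built from the same symbols the program reasons with, so there is nothing to reverse-engineer afterwards.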

A Major Stumbling Block of Symbolic AI

Simply due to the nature of this approach, there’s a big pitfall that comes up when you try to implement it in AI systems. Basically, knowledge needs to be entered manually for the program to work. I’ve just used a fair amount of jargon, so let’s go back to the previous example of a family tree. The relations there can be organized and identified with ease, largely because you, the reader, can read, and because lines are drawn to establish clear connections from one object to the next. But what about a significantly more complex alternative?

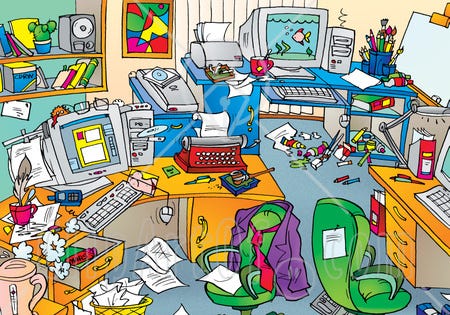

This picture is a mess, so it would be quite difficult for an algorithm to identify and classify the objects. Heck, it’s even hard for a human to really understand what on earth is going on here. You are probably able to work out that this picture shows an office, because your brain is built to take in large amounts of seemingly unrelated data and establish patterns and connections through both short- and long-term memory. A computer doesn’t have that luxury. To establish the connections between all of the disparate objects here, a programmer would need to hard-code all of that seemingly implicit meaning into the system before the computer could understand that what it’s looking at is actually an office. This gets tedious fast, so researchers look for algorithms that make the work easier. Neural networks are integral to this idea. They are a complicated concept, so I’ll just give you a quick insight into how they work.

How Neural Networks can make programmers’ lives easier

Systems such as neural networks pick up implicit knowledge through optimization instead of having it written down for them. An example of neural networks in action is image recognition. For the picture above, a computer would apply a non-linear mapping to the pixels to analyze what’s going on. Instead of hard-coding all of the implicit knowledge that humans generate automatically when they see such a picture, a programmer would use a ‘black box’ (so named because the programmer doesn’t have a good understanding of what exactly is going on inside the program). The black box has parameters, and we have the computer do a lot of complicated math to fine-tune them until they capture patterns across the objects. As if in refutation of the belief that the universe is chaotic and free-wheeling, computer scientists have found that doing this math on millions or even billions of individually uninterpretable parameters finds enough patterns to let the computer come to a result. Through this method, the computer can eventually identify the objects in question.
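To give a feel for what "fine-tuning parameters with math" means, here is a deliberately tiny sketch: a single artificial neuron with three parameters, trained by gradient descent to reproduce logical AND. It is an assumption-laden toy, nowhere near an image recognizer, and the learning rate and epoch count are arbitrary choices.

```python
import math

# Toy training data for logical AND: (inputs, target).
# It stands in for the pixels and labels a real network would see.
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]


def sigmoid(z):
    """Non-linear squashing function mapping any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(params, x):
    """Output of one neuron: weighted sum of inputs passed through sigmoid."""
    w1, w2, b = params
    return sigmoid(w1 * x[0] + w2 * x[1] + b)


def train(data, epochs=5000, lr=0.5):
    """Nudge the parameters a little after every example to reduce the error."""
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for x, target in data:
            # Gradient of the log loss with respect to the weighted sum.
            error = predict((w1, w2, b), x) - target
            w1 -= lr * error * x[0]
            w2 -= lr * error * x[1]
            b -= lr * error
    return w1, w2, b


params = train(data)
print(params)
for x, target in data:
    print(x, round(predict(params, x), 3), target)
```

Nobody told the neuron what AND means. The three numbers it ends up with just happen to produce the right answers, and on their own they explain nothing. Scale that up to millions of parameters and you have the black box.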

This sounds a bit like magic — and can have a few downsides because of that. Neural networks aren’t really interpretable. “What’s the big deal?” you say, thinking of how convenient it would be to have an image recognition system identify and classify images through black-box methods. But that’s not the only application of inexplicit AI. There are plenty of other tedious problems that computer scientists would love to apply this ‘magic’ to — like the programs that run self-driving cars, or advanced medical analytics. These models generalize at an incredibly high conceptual level — which we can’t really understand. This becomes a big issue when you need to be able to tell the people whose lives depend on your programs why your program made a certain decision.

As you can see, this isn’t ideal. Ironically, the idea of non-symbolic AI originated from scientists trying to emulate a human brain and its system of interconnected neurons but ended up creating something even more complex and uninterpretable. So, what’s the answer? Tedious, handcrafted symbolic AI systems that require programming talent and patience we don’t have? Or ‘magic’ that programmers themselves can’t really explain?

Thoughts on a better way to gain quality results

The benefits and downsides of symbolic and non-symbolic learning are surprisingly complementary. A combination of the two, often known as the hybrid approach (or neuro-symbolic AI), can often compensate for the downsides of both. Their strengths and weaknesses offset each other, and hybrid systems have been found to be incredibly successful in perceptual tasks. Explaining how hybrid AI works will take another paper, but for now, think of it as a neural network and a symbolic reasoner working together to achieve a better and more flexible approach. Sounds intriguing, right?
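Without going into how real hybrid systems are built, here is one toy way to picture the division of labor. A stand-in for a neural network turns an image into object labels with confidence scores, and a small set of hand-written rules reasons over those labels. Everything here is hypothetical: `perceive` returns hard-coded scores instead of running a model, and the rules and threshold are invented for the office example.

```python
def perceive(image_id):
    """Stand-in for a trained network: image -> object confidence scores.

    The scores are hard-coded assumptions; a real system would compute
    them from pixels.
    """
    fake_outputs = {
        "photo_1": {"desk": 0.94, "monitor": 0.88, "chair": 0.71, "bed": 0.03},
        "photo_2": {"bed": 0.91, "lamp": 0.65, "desk": 0.12},
    }
    return fake_outputs[image_id]


# Symbolic layer: each scene is defined by the objects it requires.
RULES = {
    "office": {"desk", "monitor", "chair"},
    "bedroom": {"bed", "lamp"},
}


def classify_scene(image_id, threshold=0.5):
    """Return (scene, evidence): the first rule whose objects were all detected."""
    scores = perceive(image_id)
    detected = {obj for obj, score in scores.items() if score >= threshold}
    for scene, required in RULES.items():
        if required <= detected:
            return scene, sorted(required)
    return "unknown", []


print(classify_scene("photo_1"))
print(classify_scene("photo_2"))
```

The perception step stays a black box, but the final decision comes with its evidence attached: the scene is an office because a desk, a monitor, and a chair were detected.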

Interpretation

In recent years, the gap between symbolic and non-symbolic models has been narrowing. A future hybrid paradigm could help us overcome the data intensity of neural networks and give us algorithms that are much better at reasoning, while also maintaining our ability to understand what’s going on under the hood.