Teaching Policy With AI and Video Games

An AI and Education discussion hosted by MSAI examines two examples of video games that help users learn about moral and causal reasoning.

Research has shown that artificial intelligence (AI) can become a valuable asset for teaching quantitative topics, like algebra or statistics. But what happens when you use AI to teach individuals about policy argumentation on topics like climate change or income inequality?

Matt Easterday, an assistant professor in Northwestern University's School of Education and Social Policy, posed that question as part of a recent presentation on AI and Education hosted by Northwestern Engineering's Master of Science in Artificial Intelligence (MSAI) program. The answer, as he outlined, is complicated.

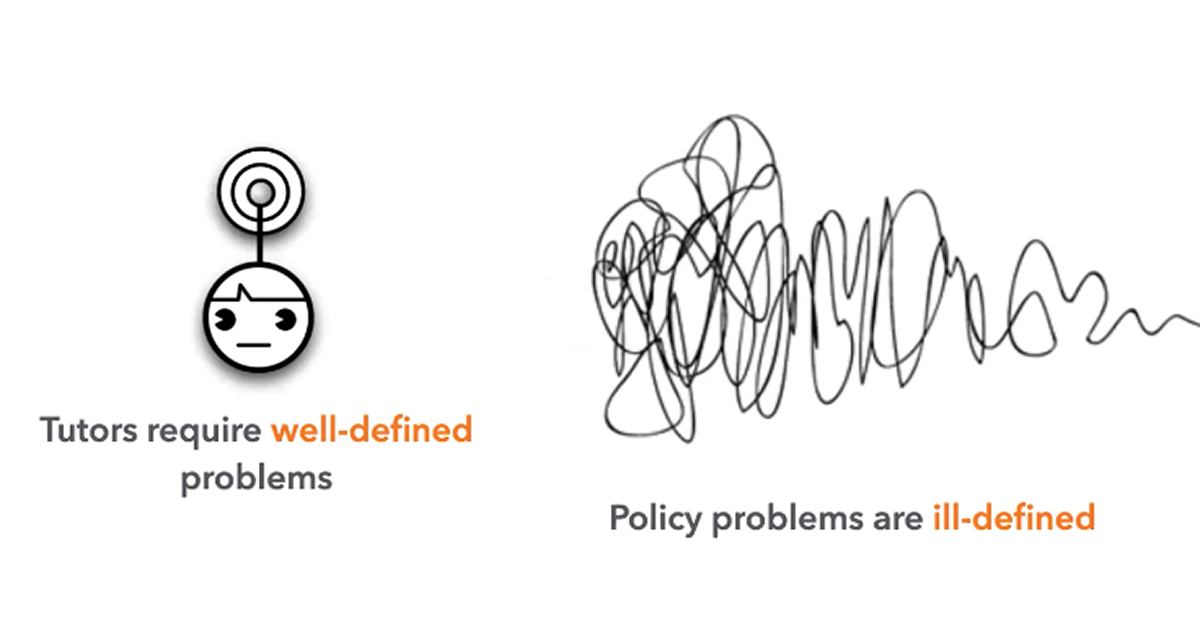

When it comes to education, AI is often incorporated using intelligent tutoring systems. These intelligent tutors observe how students solve a problem and give feedback on each step of the problem-solving process. That works with formulaic equations, but it becomes more challenging with abstract topics like money in politics.

"If we want to use AI to help with that, we run into a problem very quickly," Easterday said. "Most of our intelligent tutoring systems require very well-defined problems — things where we can literally give the computer an algorithm and it can solve it in order to build these tutoring systems."

Policy problems are ill defined, so the challenge becomes figuring out how to present them in a clear way so that intelligent tutors can be incorporated. On top of that, teaching these problems has to be done in an interesting way in order to keep people engaged.

Easterday's solution? Video games.

Easterday presented two examples of cognitive games for policy argumentation that he helped develop through the Delta Lab, the first interdisciplinary research and design studio at Northwestern. The first example was Policy World, a choose-your-own-adventure style game that puts the user in the role of a lawyer hired by a think tank.

In each level, players work to advance a new policy. The first level begins with an analysis phase, in which players search through articles in a fake Google interface. Once they find pertinent support for their policy, players are asked to find the causal claims in the article. From there, they identify what kind of evidence is behind each causal claim, create a causal diagram, and synthesize evidence across all the causal claims they find.

Because the articles and content used for support are coded into the game, the intelligent tutor knows exactly where the causal claims are located and how they link together, which means it can help users who struggle. "What looks like a very open-ended problem to the student is a very well-defined problem to the expert system on the back end," Easterday said.
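To make that idea concrete, here is a minimal sketch of how a tutor could check a student's answer against causal claims that were encoded ahead of time. It is not Policy World's actual implementation: the `CausalClaim` type, the `ARTICLE_CLAIMS` table, the `check_claim` function, and the carbon tax example are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CausalClaim:
    cause: str
    effect: str
    evidence: str  # hypothetical labels, e.g. "experiment" or "observational study"


# Hypothetical content: each article ships with its causal claims already encoded,
# so the tutor never has to interpret free text.
ARTICLE_CLAIMS = {
    "article-1": {
        CausalClaim("carbon tax", "emissions", "observational study"),
    },
}


def check_claim(article_id: str, student: CausalClaim) -> str:
    """Compare a student's claim to the encoded claims and return feedback."""
    encoded = ARTICLE_CLAIMS[article_id]
    if student in encoded:
        return "correct"
    for claim in encoded:
        # Right link between variables, wrong evidence type.
        if (claim.cause, claim.effect) == (student.cause, student.effect):
            return "hint: right causal link, re-check the type of evidence"
        # Same two variables, but cause and effect are swapped.
        if (claim.cause, claim.effect) == (student.effect, student.cause):
            return "hint: the direction of the claim is reversed"
    return "hint: the article does not make this claim"


if __name__ == "__main__":
    answer = CausalClaim("emissions", "carbon tax", "observational study")
    print(check_claim("article-1", answer))
```

Because every claim is enumerated in advance, checking an answer reduces to a lookup plus a few targeted comparisons, which is what lets an open-ended reading task behave like a well-defined problem for the tutor.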

After the analysis phase, users are asked to make a policy recommendation to a senator, during which they identify how their interventions will affect the intended outcome. When a corporate lobbyist argues against them, users have to provide evidence from the articles to support their claim.

If users win the debate, their policy is adopted and they move on to the next level. Empirical work shows that Policy World helped users develop their policy argumentation skills.

While Policy World focuses on causal reasoning, Political Agenda uses AI to teach moral reasoning, which is critical for policy debates.

"A lot of our political debates are not actually about evidence and mechanism, it's really about whether a particular policy aligns with your ideological values," Easterday said. "It turns out we're really bad at understanding people's ideological values if they're different from our own."

In Political Agenda, the user is an assistant to a talk show host. The host routinely has guests with distinct political viewpoints on the show, and he wants to boost the show's ratings by getting these guests riled up by asking obnoxious questions. However, he needs help understanding what questions will rile up each guest.

For example, the host asks users what type of response a Libertarian guest will give to a question about healthcare. If the answer is wrong, the intelligent tutor guides users through the hallmarks of a Libertarian viewpoint and how they might apply to this specific question.

Easterday acknowledged there is more to examine on the topic of intelligent tutors and policy education, but there is a place for AI in the future of education.

"Tutoring requires really well-defined problems, and it doesn't seem like we should be able to do this (with) policy," Easterday said. "In fact, we can simplify things in such a way that it makes it amenable to tutoring."