Connoisseurs of Touch

Northwestern Engineering researchers are breaking new ground in touchscreen technology, putting tactile information at users' fingertips. Literally.

With the release of its revolutionary iPhone in 2007, Apple ushered in the era of multitouch touchscreens, enabling users to interact with their phones through pinches, swipes, and taps. But for all the popularity of the now-ubiquitous touchscreen, there’s an undeniable irony in the name: touchscreens don’t offer much of anything to touch.

“We say ‘touch’ in ‘touchscreen,’ but that’s only half of touch,” says Northwestern Engineering’s J. Edward Colgate. “You’re telling it something, but it’s not telling you much back.”

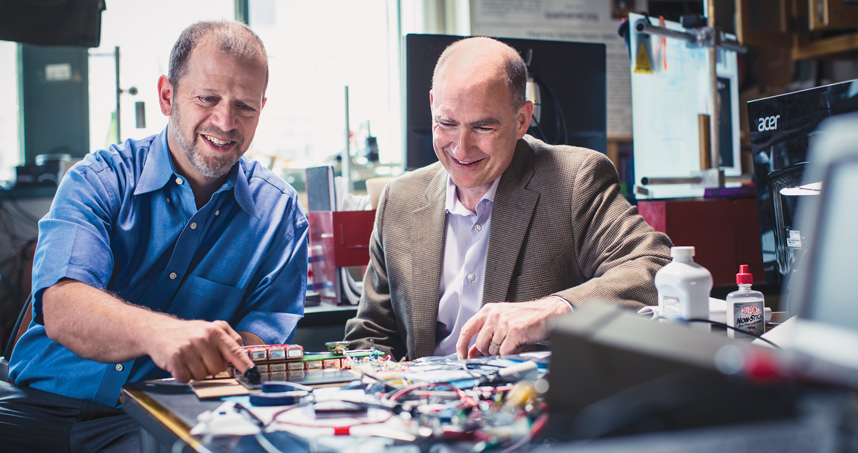

Colgate and his longtime collaborator Michael Peshkin, both professors of mechanical engineering at Northwestern, consider themselves “connoisseurs of touch” and aim to bring tactile feedback to our digital interfaces. They think touchscreens should give you the same physical satisfaction as buttons that click and toggles that snap.

Peshkin points to his 35-year-old HP-25 calculator with protruding rectangular buttons and a long, slender display. “You feel those keys and you realize that someone put considerable effort into making them feel efficient,” he says. “You know when you’ve pushed them. People so quickly forget that’s something of value.”

If this seems fairly esoteric, consider that touch is one of our five basic senses and provides critical information for managing almost every aspect of our lives. Touch helps us make our way through dark rooms and adjust the car radio without looking at the dial. It tells us whether something is too cold or too hot, clean or dirty, hard or soft, not to mention the role it plays in personal relationships, enabling us to comfort, nurture, and communicate with others.

TOUCHING EXPERIENCES

To make digital touch a reality, Colgate and Peshkin have spent years developing technology that makes flat, glossy touchscreens feel bumpy and textured, allowing users to “feel” the scalloped edges of a key or the smooth swipe of a turned page.

Now, they have incorporated this technology in what they call the TPad phone: a standard smartphone nestled in a case that enables physical textures to be communicated through the screen. The unassuming case, which looks like a typical protective cover, houses a lightweight circuit board. A thin glass plate covers the phone’s screen. As your finger slides across the glass, ultrasonic waves in the glass modulate the friction between it and your finger. The technology senses where your finger is, so it can modulate friction in response to your finger’s location relative to whatever is displayed visually. It can make the screen feel sticky, slippery, bumpy, or wavy—or it can create an “edge” effect as your finger moves onto a button or icon.
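To make the position-dependent part of that description concrete, here is a minimal Python sketch of how a controller might choose a friction level from the finger’s location. Everything in it is an assumption for illustration: the `Button` type, the `friction_level` and `vibration_amplitude` functions, the millimetre coordinates, and the 2 mm bump spacing are hypothetical, not the TPad’s actual software. It relies only on the general squeeze-film principle behind ultrasonic friction displays, in which more vibration means less friction.

```python
import math
from dataclasses import dataclass


@dataclass
class Button:
    """Hypothetical on-screen button, with bounds in millimetres."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def friction_level(x: float, y: float, buttons: list[Button],
                   bump_period_mm: float = 2.0) -> float:
    """Return a normalized friction level in [0, 1] (0 = slippery, 1 = sticky)."""
    for b in buttons:
        if b.contains(x, y):
            # Full friction over a button: the jump from the background level
            # is felt as an "edge" when the finger slides onto it.
            return 1.0
    # Background: a sinusoidal grating that feels like a row of bumps as the finger moves in x.
    return 0.5 + 0.25 * math.sin(2 * math.pi * x / bump_period_mm)


def vibration_amplitude(friction: float) -> float:
    """Map friction to a normalized ultrasonic amplitude: more vibration, less friction."""
    return 1.0 - min(max(friction, 0.0), 1.0)


# Example: a finger sliding right toward and onto a button spanning x = 10..20 mm.
ok_button = Button(10.0, 0.0, 20.0, 8.0)
for x in (8.0, 9.5, 10.0, 15.0):
    f = friction_level(x, 4.0, [ok_button])
    print(f"x={x:5.1f} mm  friction={f:.2f}  amplitude={vibration_amplitude(f):.2f}")
```

A real device would repeat this lookup continuously as the touch sensor reports new finger positions; the sketch shows only the mapping from location to friction.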

“The physical effect is really happening,” Peshkin says. “But what your brain does with that is interesting. If you feel bumps on the screen while touching an image of a golf ball, your brain will interpret those bumps as dimples. It’s a pretty powerful illusion.”

THE 'OLD GUYS'

When Colgate and Peshkin began their careers, engineers didn’t use the word “haptics.” Derived from the Greek haptikos, roughly “able to touch,” haptics was a term used mainly by the psychology community to describe the perceptual system. Both researchers worked in robotics, with a particular interest in touch, but they didn’t describe their work as haptics until years later.

“I worked in telemanipulation,” Colgate says. “A telemanipulator typically involves two robots: the ‘master’ that a human grasps and moves like a joystick, and the ‘slave’ that may be far away but mimics the motions of the master. My students and I built a master but ran out of money for the slave, so instead we used a computer program to simulate a ‘virtual’ slave interacting with a virtual world. Unbeknownst to me—or anyone at the time—that was a haptic display.”

In a twist that neither expected, Colgate and Peshkin became pioneers in the haptics field. They have founded three companies together, and each holds numerous patents. Colgate also cofounded the Haptics Symposium, the field’s oldest conference, and served as the inaugural editor of IEEE Transactions on Haptics, the first scientific journal dedicated to the field.

“We’re recognized as the ‘old guys’ in haptics,” Colgate says.

“Come on now,” Peshkin laughs. “That can be said in other ways.”

After 27 years of close collaboration, the pair has developed a playful partnership, replete with teasing, shared triumphs, and productive arguments. Both say they owe their careers to each other and that neither would have made the same progress alone.

Peshkin joined Northwestern University in 1987, and Colgate, who is also the Allen K. and Johnnie Cordell Breed Senior Professor in Design, followed suit just one year later. Soon after meeting, they decided to combine forces, taking a literal sledgehammer to the wall between their individual labs to create a shared space. They still share a lab and practically everything else: student researchers, group meetings, grants, and businesses.

Tanvas, formerly called Tangible Haptics, is Colgate and Peshkin’s most recent business venture and is poised to become their most groundbreaking. The five-year-old company has already captured the attention of investors, who gave it a $5 million boost in June. The money will fund the team’s further work on electrostatic technology for haptic touchscreens. Commercial developers and academic researchers can purchase the TPad through Tanvas.

INSPIRING THE NEXT GENERATION

As the “old guys,” Colgate and Peshkin, who is also the Bette and Neison Harris Professor in Teaching Excellence, have cultivated a vibrant student culture in their shared lab at Northwestern, where the next generation of researchers is developing haptic technology. PhD candidate Joe Mullenbach says, “Before I came here, I took a tour of the lab and felt the TPad. It was incredible. I knew right then that I wanted to be a part of it.” Mullenbach and fellow student Craig Shultz helped develop the device into what it is today.

New Applications

Mullenbach is working on more features, such as finding ways for the screen to push a finger along the glass, even when that finger is resting passively on it. The group is also developing applications, including TextureShop, the subject of a National Science Foundation grant. Just as Photoshop gives users tools for editing pictures, TextureShop will offer a toolkit of textures and sensations that users can apply to interface icons, or even to images, on a touchscreen.

“Today, algorithms that we use to go from an image to a touch sensation are very simple,” Mullenbach says. “In the future, these will only get more realistic.”

This realism could even extend to photos we take ourselves. Mullenbach and Shultz developed a “picture loader” application that allows the user to upload a photo or computer-generated image and feel it on the screen, or even snap a new photo and feel it immediately.
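Mullenbach describes today’s image-to-touch algorithms as very simple. One simple mapping that a picture loader could use, offered here only as an illustration and not as the group’s actual method, is to make darker pixels feel stickier. The function names are hypothetical, and the image is assumed to be a list of rows of (r, g, b) tuples with 8-bit channels; the luminance weights are the standard ITU-R BT.601 coefficients.

```python
def luminance(rgb: tuple[int, int, int]) -> float:
    """Perceived brightness (0-255) using ITU-R BT.601 weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def image_to_friction_map(image: list[list[tuple[int, int, int]]]) -> list[list[float]]:
    """Convert an RGB image to per-pixel friction in [0, 1]; darker pixels feel stickier (an assumption)."""
    return [[1.0 - luminance(px) / 255.0 for px in row] for row in image]


def friction_under_finger(fmap: list[list[float]], x_px: float, y_px: float) -> float:
    """Look up friction at the finger's pixel position, clamped to the image bounds."""
    row = min(max(int(y_px), 0), len(fmap) - 1)
    col = min(max(int(x_px), 0), len(fmap[0]) - 1)
    return fmap[row][col]


# A 2 x 2 test image: black and white on top, two grays below.
image = [[(0, 0, 0), (255, 255, 255)],
         [(128, 128, 128), (64, 64, 64)]]
fmap = image_to_friction_map(image)
print(friction_under_finger(fmap, 0.4, 0.2))   # finger over the black pixel
print(friction_under_finger(fmap, 5.0, -3.0))  # off-image touch clamps to the top-right (white) pixel
```

Brightness is only a stand-in for surface texture, which is why a mapping like this counts as simple.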

New Software

Another PhD candidate, David Meyer, is working on the software component for Mullenbach’s forthcoming TextureShop application. Meyer measured the textures on an array of materials, including wood, carpet, burlap, foam, plastic, and cardboard, and uploaded them into a tool he developed called “Texture Composer.” Users can edit the touch sensation of these textures to make them feel quite different on the screen.

“You can make something rough feel softer,” Meyer explains. “It’s kind of like how Auto-Tune works for music, which can take unpleasant sounds and make them more pleasant.”
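Meyer’s comparison to Auto-Tune suggests signal processing applied to a recorded texture. How Texture Composer actually edits textures is not specified, so the sketch below is only an illustration under stated assumptions: a measured texture is treated as a 1-D sequence of friction samples taken along the material, and “softening” is done with a centered moving-average filter, which attenuates the fine, rapid variations that feel rough. The function names, the roughness measure (RMS of sample-to-sample differences), and the sample profile are all hypothetical, not measured data.

```python
def soften(profile: list[float], window: int = 3) -> list[float]:
    """Low-pass a 1-D texture profile with a centered moving average.

    Near the ends the window is truncated, so each output is the mean of the samples available.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    half = window // 2
    out = []
    for i in range(len(profile)):
        lo, hi = max(0, i - half), min(len(profile), i + half + 1)
        segment = profile[lo:hi]
        out.append(sum(segment) / len(segment))
    return out


def roughness(profile: list[float]) -> float:
    """RMS of successive differences: a crude proxy for how rough a profile feels."""
    diffs = [b - a for a, b in zip(profile, profile[1:])]
    return (sum(d * d for d in diffs) / len(diffs)) ** 0.5 if diffs else 0.0


# A made-up, sharply alternating profile (not measured data), softened with wider windows.
coarse = [0.2, 0.9, 0.1, 0.8, 0.2, 0.9, 0.1, 0.8]
for w in (1, 3, 5):
    print(w, round(roughness(soften(coarse, w)), 3))
```

A window of 1 leaves the texture unchanged; widening it smooths away more of the fine detail.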

Meyer imagines that touch could one day become a regular part of online shopping. Shoppers might be able to touch a blanket or article of clothing on their screens and decide whether or not they like the fabric. And if Craig Shultz has anything to say about it, those shoppers will also be able to knock on a wooden table on their screen to see if it’s solid or hollow.

New Capabilities

Shultz is working on an audio-tactile display for acoustic touch. Made of aluminum and aluminum oxide, the special electrostatic surface turns a finger into a speaker, enabling it to play music. Here’s how it works: The user holds an electrode that is hooked up to a computer. The user then touches an electrostatic surface that has a voltage across it, which alters the friction between the skin and the surface. As the user’s finger slides across the surface, changes in friction cause the skin to vibrate. Those vibrations are the music, which emanates right from the finger. For anyone concerned about the current running through the body, Shultz says it’s about the same amount as in the electrical signals between nerves.

“We recognize objects by their acoustics,” Shultz says. “This project isn’t just about playing music. It could lead to new interfaces that allow you to feel whether something is hollow, metallic, or ceramic.”
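One detail of the “finger as speaker” idea lends itself to a short worked example. Assuming, as for an ideal parallel-plate capacitor, that the electrostatic attraction between skin and surface scales with the square of the applied voltage, feeding an audio waveform straight to the electrode would make the force follow the signal squared; for a pure tone, sin² contains only a constant plus a component at twice the intended frequency. A square-root mapping with a built-in offset keeps the force proportional to the audio instead. This is an illustrative sketch, not Shultz’s circuit; the function names and the 100 V ceiling are hypothetical.

```python
import math

V_MAX = 100.0  # hypothetical peak electrode voltage, volts


def drive_voltage(sample: float, v_max: float = V_MAX) -> float:
    """Map an audio sample in [-1, 1] to a voltage whose square varies linearly with the sample."""
    s = max(-1.0, min(1.0, sample))  # clip out-of-range samples
    return v_max * math.sqrt((1.0 + s) / 2.0)


def relative_force(voltage: float, v_max: float = V_MAX) -> float:
    """Attractive force relative to its value at v_max, assuming force is proportional to V**2."""
    return (voltage / v_max) ** 2


# A few points across one cycle of a 440 Hz tone sampled at 8 kHz: with the square-root
# mapping, the force tracks (1 + s) / 2, an offset plus the tone itself, rather than s**2.
rate, freq = 8000, 440.0
for n in range(0, 18, 3):
    s = math.sin(2 * math.pi * freq * n / rate)
    v = drive_voltage(s)
    print(f"sample={s:+.3f}  volts={v:6.1f}  force={relative_force(v):.3f}")
```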

New Research

To better understand how haptics works, graduate student Becca Fenton Friesen has been studying the finger and how it interacts with surfaces. She is particularly interested in how the thin layer of air between the fingertip and a surface affects vibrations and other tactile sensations. She has been building artificial fingertips for experiments in which a human finger cannot be used, such as those inside a vacuum chamber.

“We originally thought that if it were squishy like a finger, it would work like a finger,” she says. “It turns out that it’s more complicated than that. We’re trying to understand what materials in the artificial fingertip make it work or not on the TPad.”

These projects venture out into the unknown world of digital touch. “It’s like a silent movie,” Meyer says. “Before audio was introduced, people didn’t even know they wanted it. But then audio and video together transformed the world. We don’t yet know all the applications that will be possible with this technology.”

THE FUTURE OF THE FIELD

Colgate, Peshkin, and their students are excited by how far the haptics field has come within just the past five years. This year’s World Haptics Conference, hosted by Northwestern, attracted a record number of attendees. Another good sign for the field’s future: Apple has started using the word to describe its products. The new Apple Watch has a “Taptic Engine” that produces haptic feedback in the form of a light tap on the wrist.

“You can go to Apple’s homepage and read about their product and they actually use the word ‘haptic,’” Colgate says. “That’s a very new thing, and we’re all excited about that.”

The team hopes that haptics will become part of everyday conversation and that more people will become “connoisseurs of touch.” Colgate and Peshkin compare it to developing a refined palate. If you’re not trained to taste and talk about wine, chances are you cannot distinguish and describe its range of flavors and aromas. Likewise, they say, most people can’t tell touch sensations apart because they aren’t practiced at it and lack the vocabulary to express what they feel.

“Everyone expects their touchscreen to feel flat,” Peshkin says. “It’s possible that we have lost our sense of touch or at least our expectation of being able to feel something on a touchscreen. I look forward to people becoming connoisseurs of touch, and I think they will.”

“Meanwhile, we just want to make this stuff work,” Colgate says. “There are still a lot of problems to solve before this becomes a part of modern commercial electronics, which we desperately want to happen. We’re big believers in haptics.”

ROBOTIC TOUCH

When we manipulate an object with our hands, we can do much more than just pick it up and carry it. We can throw it into the air and catch it, let it slip or rotate within our grasp, push or roll it along a surface, or tap or jiggle it to make small corrective motions. These manipulation modes are enabled by visual feedback and the many sensors we have in our hands and arms. Waves of haptic information help us dynamically manipulate objects without even thinking about it.

The current inability of robots to use these manipulation modes limits their usefulness in the human world. Kevin Lynch, professor and chair of mechanical engineering, and his students are bringing robots one step closer to human-level dexterity through the development of ERIN, a state-of-the-art robotic manipulation system.

ERIN consists of a high-speed seven-joint robotic arm with a four-fingered robot hand. Each fingertip is equipped with more than twenty tactile sensors measuring temperature and local contact pressure. ERIN’s visual feedback comes from ten high-speed cameras strategically placed around the robot’s workspace that capture images of objects manipulated by ERIN at 360 frames per second.

“The fingertip tactile sensors provide information on contact forces and fine motion of the object during manipulation, such as slip,” Lynch says. “The vision system tracks the motion of the object. If I throw an object to the robot, the vision tracking system works with a robot arm feedback controller to move the arm into position to catch the object.”
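Lynch’s description of catching can be illustrated with a simple ballistic prediction. In the sketch below, which is not ERIN’s controller, two consecutive camera frames (1/360 s apart, matching the frame rate quoted above) give a velocity estimate, and the object is assumed to fly under gravity alone with no air drag. The function returns when and where the object will cross a vertical catch plane that the arm could move toward. The `Point` type, the `predict_intercept` function, and the catch-plane formulation are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

G = 9.81                # gravitational acceleration, m/s^2, acting along -z
FRAME_DT = 1.0 / 360.0  # seconds between frames at 360 frames per second


@dataclass
class Point:
    x: float
    y: float
    z: float


def predict_intercept(prev: Point, curr: Point, x_catch: float,
                      dt: float = FRAME_DT) -> Optional[tuple[float, Point]]:
    """Predict (time from now, position) where the object crosses the plane x = x_catch.

    Assumes drag-free projectile motion. A two-frame difference gives the mean velocity over
    the frame interval (the velocity at its midpoint); for z, subtracting G * dt / 2 moves that
    estimate forward to the current frame.
    """
    vx = (curr.x - prev.x) / dt
    vy = (curr.y - prev.y) / dt
    vz = (curr.z - prev.z) / dt - 0.5 * G * dt
    if vx == 0.0:
        return None  # no motion along x, so the object never reaches the plane
    t = (x_catch - curr.x) / vx
    if t < 0.0:
        return None  # the object is moving away from the plane
    return t, Point(x_catch, curr.y + vy * t, curr.z + vz * t - 0.5 * G * t * t)


# Simulated throw: starts at (0, 0, 1) m with velocity (4, 0, 2) m/s.
def true_position(t: float) -> Point:
    return Point(4.0 * t, 0.0, 1.0 + 2.0 * t - 0.5 * G * t * t)


result = predict_intercept(true_position(0.0), true_position(FRAME_DT), x_catch=2.0)
if result is not None:
    t, p = result
    print(f"reaches x = 2 m in {t:.3f} s at height {p.z:.3f} m")
```

Real throws are affected by drag and spin, so a practical system would likely fit many frames and keep refining the estimate as new images arrive.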

One goal of the research is to produce a robot with human-like dexterity that can operate in human environments. There is still a long way to go. Robots already exceed humans in strength, speed, and precision; what is still missing are the algorithms that turn sensor data into robot motor commands. Lynch’s team is building a library of algorithms for human-like capabilities, including pushing, rolling, sliding, batting, and throwing and catching objects. “Flexible manipulation is the next grand challenge of robotics,” Lynch says.
